The Deep Roots of Infra-Low Frequency Training

by Siegfried Othmer | September 23rd, 2013

On first acquaintance, infra-low frequency training requires a lot of explanation because it seems to stand apart from conventional neurofeedback. If that were really the case, however, it would likely not have emerged out of this field at all. Infra-low frequency training did not burst full-grown upon the scene like Venus out of a lotus blossom. Its roots are traceable to the standard SMR training developed by Sterman. It is instructive to review this history and to retrace the path of discovery.

Signal-following feedback

The emergence of infra-low frequency (ILF) training rests on three essential elements that take us back to the very origins of the field. The most basic of these was an emphasis on the continuous signal rather than on discrete rewards. This is what first set us apart from Sterman, who continued to treat the discrete reward as the defining issue in operant conditioning. Well before these issues were clarified in our own minds, I had the relevant experience on my own head during my first EEG training sessions in Margaret Ayers’ office in Beverly Hills in 1985. My EEG is of rather low amplitude, so it could not routinely trigger the beta1 threshold even at the most sensitive setting on the instrument. There was nothing for me to do but watch the continuous ebb and flow of the band amplitude. My brain responded nicely.

The instrument Ayers was using had been developed for Sterman’s research, so credit must go to Sterman for having thought to provide the continuous feedback option on his instrument in the first place. His rationale for doing so is not clear, however, since Barry never bought into the idea of training on the continuous signal. Sterman first inquired into our new methods a couple of years ago, and on that occasion asked the fateful question: “With such a slow signal, what is your latency to a reward?” My response was straightforward: “There is no reward. The trainee is just watching the signal.” That is really no different from what I had done in my very first encounter with this field.

So EEG training by means of “signal-following” can claim no novelty tied uniquely to ILF training. (In fact, for the first promotion of proportional feedback we have to go all the way back to Joe Kamiya.) The role of the discrete reward had already been eclipsed in our work for many years. When it finally became totally irrelevant, it was hardly missed. In the early years, clinician behavior was shaped to make the reward incidence ever more generous, in order to retain the attention of the distractible ADHD child. Once reward incidence reached the ridiculous heights of 75%, 85%, and even 95%, it was no longer playing the role that Sterman and Lubar had in mind. They continued to insist that rewards be meted out sparingly, consistent with the operant conditioning model. In our kind of training, the object had become one of maintaining continuity in the ‘successful’ state: a continuous flow of “beeps” coming at a rate of two per second. In fact, there had been a kind of role reversal. The rare event that drew attention was when the reward stopped. The discrete reward had effectively assumed the role of an inhibit.
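To make the notion of reward incidence concrete, here is a minimal sketch. It assumes that band amplitude is evaluated in half-second epochs (matching the two-per-second beep rate mentioned above) and that the reward threshold is set as a percentile of the observed amplitudes. The function names, the percentile rule, and the simulated amplitudes are all illustrative assumptions, not the logic of any particular instrument.

```python
import random

def threshold_for_incidence(amplitudes, incidence):
    """Hypothetical rule: pick the amplitude threshold that roughly
    `incidence` (a fraction between 0 and 1) of the epochs will meet."""
    ranked = sorted(amplitudes)
    k = int(round((1.0 - incidence) * len(ranked)))
    k = min(max(k, 0), len(ranked) - 1)  # keep the index inside the list
    return ranked[k]

def reward_incidence(amplitudes, threshold):
    """Fraction of epochs that would earn a reward ('beep')."""
    hits = sum(1 for a in amplitudes if a >= threshold)
    return hits / len(amplitudes)

random.seed(0)
EPOCHS_PER_SECOND = 2                      # "two per second", as in the text
n_epochs = 10 * 60 * EPOCHS_PER_SECOND     # a 10-minute stretch of training
# Simulated band amplitudes in microvolts (an assumption, not real EEG).
epochs = [random.gauss(5.0, 1.0) for _ in range(n_epochs)]

for target in (0.25, 0.75, 0.95):
    thr = threshold_for_incidence(epochs, target)
    rate = reward_incidence(epochs, thr)
    silent_per_min = (1.0 - rate) * EPOCHS_PER_SECOND * 60
    print(f"target {target:.0%}: threshold {thr:.2f} uV, "
          f"incidence {rate:.1%}, silent epochs per minute {silent_per_min:.0f}")
```

At 95% incidence and two epochs per second, the beeps fall silent only about six times a minute, which is why the pause, rather than the beep, becomes the salient event.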

Bipolar Montage

The second element that was essential to the emergence of ILF training was the use of bipolar montage. It should not be necessary to make the case for the use of bipolar montage, since the entirety of Sterman’s published research utilized that placement. But that ‘fact of history’ seems to have been air-brushed out of the picture, and controversy did emerge around that issue. That bit of history needs to be demystified. Barry made the switch to referential placement as part of the adoption of QEEG-based targeting in the early nineties, since the QEEG made specific targeting possible. The prior research history became mere prologue. The switch to QEEG-based protocols also had other more subtle effects on the way neurofeedback was thought about in those years. The localization hypothesis of neuropsychology was the implicit assumption of the approach, and supported the case for referential placement. If what happens at a single site is the issue, then bipolar montage needlessly confuses matters with ambiguities. Just what is changing in the signal, amplitude or phase, and at which site? How do we know what we are really doing?
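The ambiguity raised above can be made concrete with a little phasor arithmetic. A bipolar channel records the difference between two active sites, so if each site carries a rhythm of the same frequency with amplitudes A and B and a phase difference Δφ, the derived amplitude is sqrt(A² + B² - 2AB cos Δφ). The sketch below treats each site as a pure sinusoid, which is a simplifying assumption, and shows that the same change in bipolar amplitude can arise either from a phase shift between the sites or from an amplitude change at one of them.

```python
import math

def bipolar_amplitude(amp_a, amp_b, phase_diff_rad):
    """Amplitude of the bipolar derivation (site A minus site B) when both
    sites carry a sinusoid of the same frequency. Law of cosines on phasors."""
    value = amp_a**2 + amp_b**2 - 2.0 * amp_a * amp_b * math.cos(phase_diff_rad)
    return math.sqrt(max(value, 0.0))  # guard against tiny negative rounding

# Baseline: equal amplitudes (arbitrary units), 60 degrees apart.
baseline = bipolar_amplitude(10.0, 10.0, math.pi / 3)
# Scenario 1: amplitudes unchanged, the phase difference grows to 90 degrees.
phase_shift = bipolar_amplitude(10.0, 10.0, math.pi / 2)
# Scenario 2: phase difference stays at 60 degrees, site A's amplitude rises.
amp_rise = bipolar_amplitude(5.0 + 5.0 * math.sqrt(5.0), 10.0, math.pi / 3)
# Equal, perfectly in-phase activity at both sites cancels entirely.
common_mode = bipolar_amplitude(10.0, 10.0, 0.0)

print(f"baseline {baseline:.2f}, phase shift {phase_shift:.2f}, "
      f"amplitude rise {amp_rise:.2f}, common mode {common_mode:.2f}")
```

Both scenarios move the bipolar amplitude from 10 to about 14.14, so the bipolar signal alone cannot say which of the two happened, or at which site.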

More surreptitiously, the QEEG-based approach brought with it certain assumptions about how EEG data should be treated ‘scientifically.’ This meant looking at the signal for its ‘central tendency,’ its steady-state or stationary values. The band-limited signals were treated as Gaussian-distributed, which then led to the elevation of normative behavior as an objective in training. It also meant using methods that discriminated against the obvious variability we see in the real-time EEG, which led to the use of large sampling intervals and to the averaging of many samples. Variability of the EEG had become the contaminant in the broth, rather than the broth itself. Seen from that vantage point, a focus on moment-to-moment change in the EEG during feedback seemed contrarian at best, and perhaps roguish or even bizarre at worst.
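Why long sampling intervals hide variability can be seen in a minimal sketch: simulate a fluctuating band-amplitude series, then average it over progressively longer windows. The simulated series and the independence of its samples are assumptions for illustration only. For independent samples, the spread of the window means shrinks roughly as 1/sqrt(N), so the moment-to-moment dynamics that real-time feedback works with are largely averaged away.

```python
import random
import statistics

def window_means(series, window):
    """Means over consecutive, non-overlapping windows of `window` samples."""
    return [statistics.fmean(series[i:i + window])
            for i in range(0, len(series) - window + 1, window)]

random.seed(1)
# Simulated band amplitude sampled twice per second for 20 minutes. The
# samples are independent here, a simplifying assumption; real EEG amplitude
# is autocorrelated, so the shrinkage would typically be less dramatic.
amps = [random.gauss(5.0, 1.0) for _ in range(2400)]

for window in (1, 4, 16, 64):
    means = window_means(amps, window)
    print(f"window {window:>2} samples: {len(means):>4} values, "
          f"spread (std dev) {statistics.stdev(means):.3f}")
```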

And yet we were getting results, as indeed we had been all along with the very same strategy, and we saw no need to defend it. But we were up against the presumption that QEEG-based training was intrinsically more ‘scientific.’ Given the ‘second-class’ status of the field at the time, working according to scientific principles was the least one should aspire to do. For some years, QEEG-based training was essentially mandated by the mandarins of the field.

Old-timers may recall the controversy of the time over providing ‘real-time’ feedback. It became a battle between digital filtering of the signal and transform-based approaches. By now history has rendered its verdict. The transform-based systems, Lexicor and Autogenics, fell by the wayside after a few years, and the NeuroCybernetics system, which we had originally designed for Ayers, came to dominate the marketplace in the nineties.
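The trade-off behind that controversy can be sketched with some back-of-the-envelope arithmetic. A transform (DFT) computed over a window of T seconds has bins spaced 1/T Hz apart and yields a new estimate only once per window or hop, whereas a recursive digital filter emits a new output with every incoming sample (though its own delay depends on its bandwidth). The 256 Hz sampling rate and the window lengths below are assumptions for illustration; this is not a model of the Lexicor, Autogenics, or NeuroCybernetics designs.

```python
def dft_bin_spacing_hz(window_s):
    """Frequency resolution of a DFT computed over window_s seconds of data."""
    return 1.0 / window_s

def dft_updates_per_s(window_s, overlap=0.0):
    """Update rate of a sliding DFT whose windows overlap by `overlap` (0..1)."""
    hop_s = window_s * (1.0 - overlap)
    return 1.0 / hop_s

def recursive_filter_updates_per_s(fs_hz):
    """A recursive (IIR) digital filter produces one output per input sample."""
    return float(fs_hz)

FS_HZ = 256  # assumed sampling rate, for illustration only

for window_s in (0.5, 1.0, 2.0):
    print(f"{window_s:.1f} s window: bins {dft_bin_spacing_hz(window_s):.2f} Hz apart, "
          f"{dft_updates_per_s(window_s):.1f} updates/s without overlap, "
          f"{dft_updates_per_s(window_s, overlap=0.75):.1f} with 75% overlap")
print(f"recursive filter: {recursive_filter_updates_per_s(FS_HZ):.0f} updates/s")
```

Overlap raises the DFT update rate, but each estimate still averages over the whole window; resolving 0.5 Hz steps, for instance, takes at least a 2 s window.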

The Optimization Procedure

In those early days all neurofeedback strategies had their limitations; none enjoyed more than limited success. Lubar’s response was to be highly selective in choosing candidates for the training, which helped to assure a good outcome. We proceeded on the assumption that everyone’s brain was trainable, so the pressure was always on to refine and improve our methods. This led us to the third element that distinguishes our approach from that of others: the optimization procedure. In some people, the effects of the training were found to be highly sensitive to the specific choice of reward frequency, compelling us to make adjustments.

The awareness that training effects were a function of reinforcement frequency was nothing new, of course. But this was thought to hold only at the coarse level of the standard bands: alpha, SMR, beta1, and so on. No one expected that sensitivity to persist at a resolution of 0.5 Hz or finer. This was something new. And it had only become observable because the brain had been given information on the EEG with all of its associated dynamics.

It is important to take note of the trade-off here. In the standard operant conditioning model, the contingencies are more tightly under the therapist’s control, and the outcome is then more specifiable. When we give the brain much more information to deal with per unit time, how the brain interprets and acts upon that information is less predictable. And yet when the optimum reinforcement parameters have been established for a particular person, there are several fairly predictable consequences.

First of all, we can count on a certain stability of the value that we determined to be optimal. It would likely be much the same the next day and the next week. Secondly, if one deliberately moves off the optimum toward higher frequencies, one would likely move the person to higher arousal levels, whereas one could move the person to lower arousal levels by moving in the opposite direction. This finding gave support to the arousal model around which we were organizing our thinking. Also implicit in this finding was the existence of an underlying fine structure to the EEG that was not being discerned in the customary measurements. And finally, this called into question the attempt to stand above the fray, so to speak, and prescribe neurofeedback on the basis of data that had had all the vitality sucked out of it.
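The adjustment logic just described can be caricatured as a simple step rule. In this minimal sketch, which is our own illustration rather than a published protocol, the clinician’s observation of the client’s state is reduced to one of three labels: over-arousal moves the reward frequency down, under-arousal moves it up, and a calm, alert state leaves it where it is. The 0.5 Hz step, the 0.5 to 40 Hz limits, and the function and label names are hypothetical choices that echo the resolution and range mentioned in the text.

```python
from enum import Enum

class State(Enum):
    OVER_AROUSED = "over-aroused"     # e.g. agitated, anxious, headache onset
    UNDER_AROUSED = "under-aroused"   # e.g. drowsy, flat, foggy
    OPTIMAL = "calm and alert"

def next_reward_frequency(current_hz, observed, step_hz=0.5,
                          low_hz=0.5, high_hz=40.0):
    """Hypothetical step rule following the arousal model in the text:
    lower reward frequency -> lower arousal, higher -> higher arousal."""
    if observed is State.OVER_AROUSED:
        proposed = current_hz - step_hz
    elif observed is State.UNDER_AROUSED:
        proposed = current_hz + step_hz
    else:
        proposed = current_hz  # at the optimum: hold steady
    return min(max(proposed, low_hz), high_hz)

# Hypothetical session: one observation after each training interval.
freq = 14.0
for obs in [State.OVER_AROUSED, State.OVER_AROUSED, State.UNDER_AROUSED, State.OPTIMAL]:
    freq = next_reward_frequency(freq, obs)
    print(f"{obs.value:>14}: reward frequency now {freq:.1f} Hz")
```

Because the optimum tends to be stable from day to day, the value reached in one session is a reasonable starting point for the next.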

This last point was perhaps the most discomfiting. Neurofeedback emerged in the heyday of the prescriptive model of modern medicine. The only way in which recognition from the mainstream was ever going to be achieved was with an approach that led to reasonably predictable outcomes. And yet here we had a method where it was essential to be guided in real time by the clinical results. The only thing predictable here was the process, not the immediate impact of the intervention. What could largely be counted on was that reinforcement on the real-time EEG would lead to shifts in the person’s state of arousal, vigilance, autonomic balance, etc. One could not readily predict what these shifts would be, particularly among those who arrived at our door with highly dysregulated nervous systems.

The induced state shifts were the ubiquitous observable that would then guide the process toward the calmest and most euthymic state of which that nervous system was capable, while maintaining alertness. This then constituted the most propitious state for the conduct of the training. It was our consistent observation that if training could be conducted under such conditions, the client was also moving toward resolution of his clinical complaints. There was congruence between the conditions of homeodynamic equilibrium in the moment and the conditions required to achieve improved self-regulatory status over the longer term. Consider this from the perspective of state-dependent learning: residence in the best-regulated state is also the best platform for learning self-regulation. In any event, this gave us a real-time guide to the conduct of the training even in the absence of any a priori prescription.

It is only with all three elements in place that the training strategy expanded from the relative confines of the SMR-beta bands to cover the entire EEG spectrum out to 40 Hz, and then even encroached upon the infra-low frequency region. It is important to observe that essentially the same strategy serves, and hence the same rules apply, across the entire spectrum, from the lowest frequencies that are of biological relevance (e.g., diurnal rhythms) all the way out to the gamma band. The implication is that there is a fine structure to the EEG that has yet to be explored, and that within the above paradigm optimal training conditions prevail throughout the band.

Frequency Domain Rules

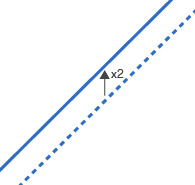

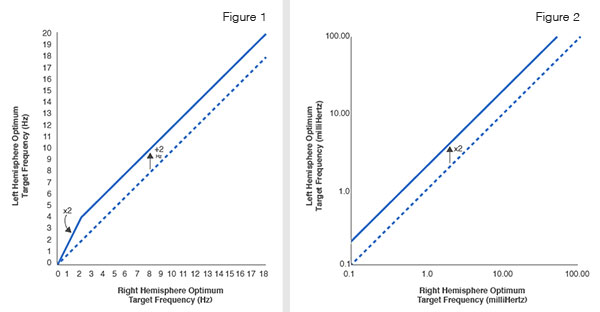

The best testimony to the fact that a unitary model applies across the band is given by the frequency rules that govern the optimization procedure. It has been clear since the early days of our SMR/beta protocols that the left hemisphere optimizes differently from the right. For years we taught the dual protocol of admixing “C3beta” training on the left with “C4SMR” training on the right. The standard bands we had inherited from prior work by Sterman and Lubar were 3 Hz apart. With the optimization procedure in place, it turned out that the optimum separation was really 2 Hz rather than 3, with the left hemisphere at the higher frequency. This value holds throughout the range from 2 Hz to 40 Hz. At lower frequencies a different rule has been observed: the left hemisphere trains optimally at twice the frequency of the right. This rule holds over four orders of magnitude in frequency below 2 Hz. The two rules agree at a right-hemisphere frequency of 2 Hz, where both place the left-hemisphere optimum at 4 Hz. These frequency rules are perhaps the most solidly established “facts” of the neurofeedback field, and their universality in turn constitutes the best evidence for the range of validity of the model, which jointly covers more than five orders of magnitude in EEG frequency. The frequency rules are graphically represented in Figures 1 and 2.

Figure 1 illustrates the rules relating optimum left hemisphere response frequency to the optimum right hemisphere response frequency over the conventional spectral range of the EEG. Note the breakpoint at 2 Hz, which represents the transition into the low frequency region.

Figure 2 illustrates the multiplicative relationship that exists between the optimum response frequencies for the left and right hemispheres in the infra-low frequency region. This relationship has been corroborated over four orders of magnitude in target frequency, from 0.1 mHz to 2 Hz. Note the logarithmic format.
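The two frequency rules can be written as a single piecewise mapping from the optimum right-hemisphere frequency f_R to the optimum left-hemisphere frequency f_L: f_L = f_R + 2 Hz for f_R of 2 Hz and above, and f_L = 2 f_R below 2 Hz. On logarithmic axes, as in Figure 2, the multiplicative rule plots as a straight line offset by log 2. The sketch below encodes the mapping as stated in the text, checks that the two branches meet at f_R = 2 Hz (both give 4 Hz), and computes the span from 0.1 mHz to 40 Hz in orders of magnitude. The function names are ours.

```python
import math

def left_from_right(f_right_hz):
    """Optimum left-hemisphere frequency for a given optimum right-hemisphere
    frequency, per the two rules stated in the text."""
    if f_right_hz <= 0:
        raise ValueError("frequency must be positive")
    if f_right_hz >= 2.0:
        return f_right_hz + 2.0   # additive rule, 2 Hz and up
    return 2.0 * f_right_hz       # multiplicative rule, below 2 Hz

def right_from_left(f_left_hz):
    """Inverse mapping; the breakpoint sits at f_left = 4 Hz."""
    if f_left_hz <= 0:
        raise ValueError("frequency must be positive")
    if f_left_hz >= 4.0:
        return f_left_hz - 2.0
    return f_left_hz / 2.0

# The two branches agree at the breakpoint: 2 + 2 == 2 * 2 == 4 Hz.
for f_r in (0.0001, 0.01, 1.0, 2.0, 14.0):
    print(f"right {f_r:g} Hz -> left {left_from_right(f_r):g} Hz")

span_orders = math.log10(40.0 / 0.0001)  # 0.1 mHz to 40 Hz
print(f"span: {span_orders:.1f} orders of magnitude")
```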

In the early days, starting in the late eighties, we were mostly training ADHD kids, who are by and large monumentally insensitive to their own state and hopeless at reporting on it. Only when the clinical agenda broadened to include migraines, pain conditions, the anxiety/depression spectrum, and many other types of dysregulation did the optimization strategy even become viable. At the same time it also became a necessity. One could not be casual in the deployment of these methods.

Migraines, being exquisitely responsive to this kind of training, were the principal stalking horse. At the optimum reward frequency, a migraine could be sent onto a path of resolution within mere minutes. At the same time, migration away from the optimum might well kindle a migraine. The training strategy served to shape clinician behavior. One did not abandon the responsive client. With a sensitive client in the chair, one did not even step out for a cup of coffee.

It was the sensitive and responsive client who drove the frontier progressively toward ever lower frequencies. Every step of the way aroused controversy within the field. We were training in the theta band! (Hadn’t Lubar shown that was a bad idea?) We were training delta! (Hadn’t Sterman said there isn’t any such thing in the waking EEG?) And then came the infra-low frequency region, which compelled the abandonment of conventional threshold-based training for pure waveform-following. (‘Is that even possible?’ was the question in the minds of many.)
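Why thresholds give way at these frequencies is partly a matter of arithmetic: a rhythm at frequency f has a period of 1/f, so at 0.01 Hz one cycle lasts 100 s, and at 0.1 mHz it lasts 10,000 s, close to three hours. A reward scheme tied to threshold crossings would deliver almost nothing within a session. What remains is to present the slow waveform itself. The sketch below assumes a simple one-pole low-pass filter, the sampling rate, and the simulated signal, all our own choices for illustration (the text does not specify the filtering used). It extracts the slow component of a simulated signal and passes it on, with no threshold, as a continuous feedback value.

```python
import math

def one_pole_lowpass(samples, fs_hz, cutoff_hz):
    """First-order IIR low-pass (exponential smoothing). Emits one output per
    input sample, so the slow waveform can be displayed continuously."""
    dt = 1.0 / fs_hz
    rc = 1.0 / (2.0 * math.pi * cutoff_hz)   # RC time constant for the cutoff
    alpha = dt / (rc + dt)                    # smoothing coefficient, 0..1
    out, y = [], samples[0]
    for x in samples:
        y += alpha * (x - y)                  # move a fraction toward the input
        out.append(y)
    return out

def period_s(freq_hz):
    """Duration of one cycle at the given frequency."""
    return 1.0 / freq_hz

FS_HZ = 10.0  # assumed sampling rate after downsampling
t = [i / FS_HZ for i in range(int(600 * FS_HZ))]            # 10 minutes
slow = [math.sin(2 * math.pi * 0.005 * ti) for ti in t]    # 200 s period
fast = [math.sin(2 * math.pi * 2.0 * ti) for ti in t]      # faster activity
raw = [s + f for s, f in zip(slow, fast)]

# No threshold and no reward: the filtered waveform itself is the feedback.
feedback = one_pole_lowpass(raw, FS_HZ, cutoff_hz=0.05)

settled = range(int(100 * FS_HZ), len(t))  # skip the start-up transient
tracking_error = max(abs(feedback[i] - slow[i]) for i in settled)
print(f"max tracking error after settling: {tracking_error:.3f}")
print(f"one cycle at 0.1 mHz lasts {period_s(0.0001) / 3600:.1f} hours")
```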

The Infra-Low Frequency Domain

Throughout all this time, the rules of engagement remained much the same. The ‘system response’ was qualitatively similar, regardless of the target frequency. But as we went lower, we extended our effectiveness to more severely compromised nervous systems. What, then, is the driver toward low-frequency training? Or, conversely, whom were we not reaching at the higher frequencies? Each type of symptom shows a distribution in severity, and within each of them we were now reaching greater levels of severity than before. The common element was usually a compromised neurological or psychological history in early childhood. The common manifestation was then typically some form of profound emotional dysregulation.

Infra-low frequency training is giving us preferential access to the network organization of emotional regulation, and concomitantly of autonomic regulation and central arousal. At the infra-low frequencies, we are witnessing the time course of activation, and of connectivity, of our intrinsic connectivity networks. By putting the brain in the loop, we turn passive observation into an active process in which the brain engages with the information to its own benefit. This places the brain at the center of the feedback process; the instrument merely provides the information the brain needs to effect, or to restore, improved self-regulatory competence.

A recent survey indicates that over the past six-plus years, between a quarter and a half million individuals around the world have experienced infra-low frequency training with our new instrument, Cygnet. We estimate that perhaps half of one percent of these have been active-duty service members or veterans of our wars suffering from PTSD, TBI, or substance dependency. It is reasonable to project that infra-low frequency neurofeedback will change the face of mental health and finally make inroads into our hitherto most intractable mental disorders.
