Paradigm Paralysis, Panacea Paranoia, and Placebo Paralogism

by Siegfried Othmer | February 2nd, 2012

The field of neurofeedback has found itself subject to conflicting forces over the past decades, and it may be helpful to articulate some of the key factors that are driving our evolution as a discipline. On the one hand, we are subject to the constraints of a health care practitioner guild, and on the other we find ourselves in the much more uncertain terrain of frontier science. The demands of both are in essential conflict. The practitioner guild must represent to the world that a coherent system of practice exists, one grounded hopefully on a coherent model that is subscribed to by all of the practitioners. Guidelines and standards of practice likely follow to clarify for practitioners the choices that have been made for the sake of a credibly defensible public posture.

On the other side we have the practical realities of frontier science, acknowledging that we are just beginning to understand the very powerful tool that we have at our disposal. The original hope (which I shared at the outset) was that a simple set of protocols derived from the original Sterman/Lubar research would serve our collective purposes. These simply needed to be pushed forward into general practice, while being subject merely to some subtle refinements. Our original NeuroCybernetics system was designed with very little flexibility because we did not see a need for it. This simple world view has had to be jettisoned.

Instead what has emerged is a broad variety of methods that are based in turn on several distinct operating principles. The fundamental divide, which has been too little articulated, is whether targeting in neurofeedback is to be driven by the objective of enhancing function or whether it is to be guided by the particulars of dysfunction. At its origins, the field started out exclusively with mechanisms-based training, and all the early research soundly established its principles. It was only with the emergence of readily accessible QEEG measures that the field turned in the direction of making dysfunction, insofar as it revealed itself in the EEG, the target of training.

The uninitiated reader might at this point ask why there needed to be a divide along these lines at all. Why not simply adopt the most useful approaches from both perspectives? After all, mechanisms-based training also incorporates inhibits as a matter of course, and much QEEG-based training also incorporates standard reward-based training. One must conclude that the drive in the direction of deficit-targeting was largely politically based, in that it seemed obvious that herein lay the prospect for a “scientifically respectable” neurofeedback. Anything that took us in the direction of a more quantitative approach to targeting was intrinsically preferable, if the issue is garnering acceptance from the scientific mainstream. But if this new approach was to meet the demands of the guild, then it had to be universally acknowledged within the field.

The resulting campaign to enforce a kind of uniformity of approach across the whole discipline was necessarily in opposition to the naturalistic emergence of new approaches out of the rich soil of clinical practice, and in the spirit of frontier science. The field is now at a place of greater diversity of approach than ever before, with a greater diversity of perspectives emerging from them. Meanwhile, those who believe that a uniformity of vision is essential to mainstream acceptance are becoming increasingly strident in their opposition, as such uniformity is slipping ineluctably from their grasp. Unsurprisingly, the actualities of frontier science are overwhelming the aspirations of the guild leadership toward uniformity of practice.

Looking back over our history, one can trace the development of this unfortunate divide to an environment defined primarily by our critics. The result is that we collectively became outward-focused and adopted defensive postures. This is inimical to frontier science, which then had to be shielded from view. The existence of the basic divide within the field between the path of enforced uniformity of practice versus the path of welcoming diversity also forced new developments under cover even within the field, where the reaction to new initiatives became increasingly toxic. Frontier science does not flourish under duress, or even under premature critical scrutiny. It needs latitude for the investigation of new initiatives.

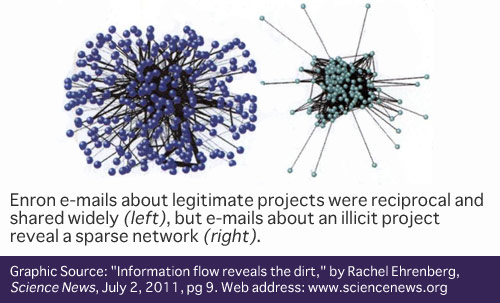

Recently the journal Science News showed a network of e-mail interchanges illustrating the typical graph for a legitimate project at Enron, and compared that to a comparable graph for one of its illicit projects. In the latter, the e-mail network was much tighter, and showed very few links outside of the in-group. A similar graph holds for the new developments in our field, but for a very different reason. In our case, connections outside of the inner core are rebuffed, and so the networks remain largely disconnected from the rest of the community. This process is well illustrated in micrography showing neurons sending tendrils out to make connections. A network quickly develops, unless the environment is even slightly toxic, at which point the tendrils withdraw.

This reflexive hostility to new ideas places significant constraints on the pace of development within the field, and on the emergence of a comprehensive model for what we do. To illustrate the centrality of information exchange, I cite the following example from our own history. We had a chance to work with an autistic child in the late eighties, and we found that our beta training was not helpful. We immediately backed off, and from that time forward put a fence around autism because we had no assurance that we could be helpful. The same happened to us with Parkinson’s. In the absence of further work with either condition, there was no reason for that posture to ever change. It took external input from our network practitioners to re-open these issues for us some years later.

Much can be gained by allowing network connectivity to establish itself among the various distinct approaches that have emerged in neurofeedback. Broadly these cover both the stimulation and the reinforcement approaches, and cover the range from near DC to high EEG frequencies. At high frequencies the most efficient approaches appeal to coherence relationships in feedback or challenge the phase at particular frequencies with stimulation. At very low frequencies we have episodic SCP-training and continuous infra-low frequency training on the reinforcement side, and tDCS and CES on the stimulation side.

To allow cross-fertilization of ideas and perhaps even some combination of approaches to emerge, we must jettison the siege mentality that still exists within the field, and the susceptibility to outside criticism that still weighs on our affairs. A look back at our history supports this view. The technology has matured despite the adverse climate, while at the same time we don’t have much to show for our effort to assuage the critics. This pattern is likely to continue, because historically critics have demonstrated amazing tenacity in the face of contrary evidence. They should simply be left alone. (“What do scientists do when a paradigm fails? They act as if nothing happened.” –Elaine Morgan.)

My first exposure to scientific dogmatism was in connection with the Kon-Tiki expedition. An anthropologist visiting us in the early fifties was simply apoplectic about Thor Heyerdahl’s effrontery in contradicting prevailing wisdom. She had not read the book about the expedition, and was not about to pollute her mind with such obvious nonsense. It has been sixty years, and we now find in the journal Science the following: “…the idea of prehistoric contact between Polynesians and South Americans has gone mainstream.” Heyerdahl’s ghost probably slowed the acceptance of this insight rather than accelerating it, simply because it energized the critics along the way, which tilted the playing field. There are some useful analogies here to our own condition. The early scientific research in neurofeedback was thought to have been rejected (whereas in fact it was mostly just ignored); there was the “premature” popularization of alpha training to live down; and we now have the prominent involvement of non-scientists in pushing the frontier.

There are three principal reasons for the ongoing animation of the critics of neurofeedback. The most obvious is Paradigm Paralysis, the categorical assertion that neurofeedback is not possible. This position is almost impervious to data because it is belief-based, so it is best left alone. The second is Panacea Paranoia, the idea that any therapy that promises benefits across the whole domain of mental health cannot be real, and hence must be nothing more than a placebo effect. Interestingly, the only entity that is allowed to be a panacea is the placebo itself. This is merely a case of misplaced concreteness. The panacea lies in the scope of brain plasticity, which we are just beginning to explore with neurofeedback. The neurofeedback itself is a trivially simple tool to elicit and mobilize brain plasticity. It is a humble servant, and should not be assigned substantial agency. The power of the placebo is lodged in the same place. There is no substantial agency there either.

We come then, finally, to the ultimate barrier to acceptance, Placebo Paralogism. What we have here is the appearance of a sound scientific counter-hypothesis when in fact it is nothing of the kind. Whereas the drug researcher can lump non-specific effects into the placebo bin and proceed at will with a controlled design, we cannot do the same. For us this would represent a category error. The argument proceeds as follows: If the placebo model is to explain neurofeedback, then it has to explain all of it. No exceptions. If there are exceptions, then we start having a very different discussion. This means that the placebo model also has to explain the hard cases, not just the easy ones. Let us go then to some of the hard cases.

We know that most cases of minor traumatic brain injury and of PTSD recover on their own. There is also no question that such recovery can be quite non-trivial, and is not explainable in terms of obvious factors such as the subsidence of edema. If such cases had been included in a formal controlled research design, such recovery would have been labeled ‘placebo response.’ But this would have been just a label for the natural process of self-recovery, aided perhaps by some expectancy factors. We now have good evidence to believe that the mechanism of such self-recovery is the gradual re-ordering of neural network functioning. Evidence for this was adduced years ago at UCLA, where imaging data showed persistent changes in the placebo group of depressives, changes which differed from those who responded to medication.

We now also know that the operative mechanism in neurofeedback is the re-ordering of neural network relations. So we have an aspirational approach for the systematic retraining of neural network functioning that is placed in juxtaposition with spontaneous recovery mechanisms. Telling the difference in the moment is impossible, just as it is impossible to tell whether a photon emitted from an atom did so via spontaneous or stimulated emission. But we have other ways of puncturing the placebo bubble that by hypothesis contains all of neurofeedback.

The most immaculate experiment ever done in neurofeedback was that of Barry Sterman with the cats that were injected with monomethylhydrazine. This was for the best of reasons, namely that neither Sterman nor the cats were aware at the time of the grander experiment in which they were playing a role. Hence neither was in a position to put a spin on events: no placebo response on the part of the cats; and no intention on the part of the researcher to end up with divergent results. This early experiment already punctured the placebo balloon, and it did so for all time.

But there is more. Consider the survey on epilepsy research published by Sterman in the EEG Journal in 2000. Essentially all those studies involved medically refractory epileptics. Significant improvement was shown with respect to medication alone. Since all anti-epileptic drugs had already been shown to be better than placebo, there is no question that a study of medication plus neurofeedback would be better than placebo as well. Hence there is no point in even doing the study. It is not necessary for neurofeedback to be better than anti-convulsant medication in a head-to-head comparison. It is sufficient that it be additive. That already places it in the category of an active mechanism.

The results recently published by Jonathan Walker make the same case even more strongly. Adding neurofeedback to medication for migraine had a much better effect than the medication alone. It wasn’t even close. No placebo-controlled study is needed here either. The same goes for ADHD. The studies by Rossiter and LaVaque showed neurofeedback matching the medication response, which again obviates a placebo design. The case for neurofeedback as contributing actively to a self-regulation remedy for epilepsy, migraine, and ADHD can be made on logical self-consistency arguments alone, without any additional placebo-controlled study.

One can also spike the placebo bubble with a collection of individual cases. If placebo explains all of neurofeedback, then we can also line up all of neurofeedback on the other side. Consider the following reports from neurofeedback clinicians: A therapist in Ireland reports working with a client with Multiple Personality Disorder. Whenever he changed reinforcement frequencies he would trigger a transition to another alter. A therapist in Colombia working with a person in Persistent Vegetative State reported the simultaneous emergence of a regularized sleep pattern, regularized menstrual period, and the subsidence of constipation upon the initiation of neurofeedback. A therapist in Key West reports that a woman tried neurofeedback for fifteen minutes at an open house, and came back three days later to say that her hot flashes had declined by 50%. She set up additional sessions for after her vacation. When she returned, she reported that her hot flashes had entirely disappeared in the interim.

Or consider the report on a court-referred Vietnam era veteran with schizophrenia of long standing. At the end of the first session, he reported that he did not feel like smoking any more. At session five, he said that he had not smoked since the previous session, a period of nineteen days. He had not been trying to quit. One may even conjecture that the training affected the underlying condition of schizophrenia, which allowed him to discontinue the dosing with nicotine. But time will tell.

Finally, consider the report on a woman who had been under the care of two psychiatrists for decades for her unrelenting anxiety, one managing her meds and the other doing psychotherapy. The woman was essentially housebound with her pet dog. After some number of neurofeedback sessions, she reported that her anxiety had suddenly disappeared. She had already made travel plans to see her family, an event that came off without a hitch.

The above cases make it difficult to invoke expectancy factors as explanatory. And once the part of the placebo response that is relevant to neurofeedback has been reframed as a “spontaneous” reordering of neural network functioning, the comparison of neurofeedback to placebo loses its saliency. It is neurofeedback that helps us understand the robust placebo response, not the other way around. To treat it as an adversary like a drug researcher is to shoot into our own foxhole. When it comes to deciding whether to wait for autonomous recovery or to intervene actively, there is no debate. With our modern methods we now routinely observe robust single-session effects that settle the question rather quickly as to whether neurofeedback training is going to be worthwhile.

Given this state of affairs, we should not indulge the research community in their zeal for placebo-controlled designs. Rather, we should urge the reframing of hypotheses to something that is relevant to the current state of the art. It would be particularly inappropriate for neurofeedback practitioners to be involved with placebo-controlled studies because that would mean a tacit admission that such studies are answering a question that still needs to be asked. And once the placebo spell has been broken, it must also be acknowledged that the placebo hypothesis cannot be used to dismiss the emerging technological initiatives any more than it can dispose of neurofeedback as a whole.

It is part of the maturation of our field that we should define for ourselves the terms of discussion and the research agenda going forward. Specifically, placebo-controlled studies should have no place in that schema. More of an inward focus on our own priorities would also mean acceptance of our existence on the frontier of neuroscience, and a positive embrace of the technological progeny and procedural variety that the field has produced. We have an advantage possessed by no other part of the neuroscience community. We operate with a tool of great subtlety with immediate feedback on what we do. That keeps us on track, and that makes all the difference.

Very well said! I have pointed to Sterman’s cats regarding placebo many times. It is so easily overlooked by many. Neither Barry nor his cats had expectations regarding the serendipitous results. The frontier is an undefined place. So much is happening regarding neuroplasticity revealed by neuroimaging. It is time to break out of a reactive stance to outmoded and uninformed critics who have a stake in maintaining the status quo. We will never match dollar for dollar the influence of some of the juggernauts. We must simply leave them behind.

Thanks for the inspiring read!

Bravo Siegfried! Once again you have eloquently defined the issues and their polemics using sound scientific scrutiny to prevent the premature merging of socially endorsed constructs with the biological baselines of the complex system we know as “the brain”. Is it not time we all begin to orient our paradigms of practice and applied practice to a post Post-Modern era?

Brilliant as always, Siegfried. Not an easy essay to read through, but worth the effort. It’s not that we don’t need continuing research–single case examples are paradigm busters but not explanatory. Your hypothesis is very good, that “placebo” and self-regulatory neurofeedback training have something in common, rather than neurofeedback being a subset of Placebo! What do they have in common? It’s going to take some paradigm-busting creative research, or a lot of it, to take us along the path you point: “…the operative mechanism in neurofeedback is the re-ordering of neural network relations. So we have an aspirational approach for the systematic retraining of neural network functioning that is placed in juxtaposition with spontaneous recovery mechanisms.”

We still have to be able to answer critics, hostile and sympathetic, in a way that holds the engagement and furthers respect for our accomplishments. I have in mind David Rabiner’s recent review and analysis (http://www.helpforadd.com/2012/january.htm) of a review in the Journal of Attention Disorders.

I very much enjoyed reading your thought-provoking essay. I do feel, however, that you are too dismissive of the possibility and desirability of demonstrating efficacy via well controlled and blinded studies. The reliance on anecdotal evidence, even if it sounds compelling, is not particularly convincing (and I would argue this is a justifiable position to take). I know you can find similar testimonials from countless practitioners of homeopathy who could probably borrow much of your essay and apply it to their own area (just Google “homeopathy testimonials”). It is too easy for confirmation bias and wishful thinking to cloud the judgment of fallible human beings, and that is what makes rigorous proof so important. Unless you believe that homeopathy is a valid medical discipline that works, you have to admit that anecdotes do not “pop the placebo bubble.”

You seem to argue that since neurofeedback works via the same mechanism that a placebo might (re-ordering of neural network relations) that it is impossible or somehow misdirected to do placebo controlled trials. If there is a benefit to using neurofeedback (and, for my daughter’s sake, I really hope there is), then it should be possible to demonstrate that when compared to sham treatments. How can you hope to progress the field if you can’t objectively demonstrate that one treatment protocol is superior to another? Maybe through this sort of study you would be able to gain more understanding of the operative mechanisms and more rapidly develop more effective treatments.

I’ll give an example that is totally made up even though I’ll attempt to anchor it in some EEG jargon that I’ve picked up. Please look past any obvious problems with my example and focus instead on the general idea. Imagine that someone gets the idea that really low frequencies are important therapeutically and so they start using them in their training protocols. Also imagine that lower frequency signals give rise to a longer characteristic time for feedback to be given to the subject (because it takes longer to detect a signal). In that case the frequency of the EEG that is being responded to is confounded with the mean time between feedback signals.

If that ends up working better, maybe it is the very low frequency that is important, but what if the characteristic time of receiving feedback is the real reason for the apparent benefit? Careful studies which lead to understanding might reveal that sort of mechanism and thus lead to independent control of the feedback time frame. This would open the door to finding that the benefits of higher frequencies can then be vastly magnified by putting in a delay to a response. [Again, this is all made up and I’m not suggesting it is true]. Now, are practitioners relying on anecdotal evidence likely to come up with this sort of insight? Maybe someone could stumble upon it, but I think it is more likely to come from more rigorous studies which seek to advance the mechanistic underpinnings of this field.

You’re right: Dismissive is the word. I am determined to see to it that neurofeedback is taken seriously well before the ‘critical’ trials are done. One reason is that this is the way progress actually happens nearly all the time, whether people admit it or not. Do you suppose that Eli Lilly would have sponsored a large-scale study on Prozac if they did not have a good idea that the outcome would be favorable? There are still questions to be resolved in any such study, and sometimes such studies still fail. But these research programs are too expensive to be launched as stabs in the dark. So the studies you would like to see done will not happen until people are already convinced that neurofeedback holds promise. So that is the critical task here.

Yes, I believe that since neurofeedback and the physiologically relevant aspect of the placebo likely work by the same mechanism, it makes no sense to me to set up a careful design to discriminate between the two. They are both observable aspects of brain plasticity, and I am happy for both of them. What allows me to know that neurofeedback is not a placebo is first and foremost the parametric sensitivity: we can make migraines come and go at will; we have been able to get hand tremor to come and go at will; we can often shift state in Bipolar Disorder essentially at will. In other words, we have a measure of control over physiological state that is convincing on the question of whether neurofeedback involves an active mechanism. There is no possibility whatsoever that we would give up this level of control in favor of a placebo, so why bother to run the test?

The evidence for NF is beyond anecdotal. We have more evidence now in favor of neurofeedback in remediation of PTSD than exists for all other methods combined. These data come from a variety of independent sources. We’re not talking about statistically significant effects here; we are not even talking about large effect sizes. We are talking about people running around essentially symptom-free, people who before neurofeedback were dysfunctional. And if indeed we are talking about the reduction of symptom severity below the threshold of clinical significance, then why is any comparison study against a placebo even called for? If we can resolve the clinical issue at hand, then we are done. There is no possibility that a placebo trial will show comparable results for the placebo arm.

All that is required is for professionals to take their conceptual blinders off. Once that has occurred, the next step is for professionals to undergo the training experience on their own heads. That’s all. Even one session is usually enough—two or three at most. Essentially no one’s skepticism survives the experience of the training on their own heads. And if matters are indeed that simple, why should I set up something more complicated?

Regarding your strawman hypothesis:

Indeed formal experimental designs would be of great interest in getting at questions of mechanisms, and to permit refinement of clinical approaches. None of these would be aided by having a placebo arm, however.

Typically what would be done here are A/B designs which compare one approach with another.

As it happens, clinical practice already incorporates the A/B design, because the clinician is evaluating protocol options every step of the way, at every session, and within every session. There is an incredible advantage in doing within-subject comparisons because under these circumstances the largest number of variables is uniform between the A and B arms.

Nevertheless, more could be learned with large-scale group studies incorporating the A/B design principle.

What seems to be missing here is a recognition that in any physical science related to practical engineering there is a professional group of researchers living in the real world and doing the research.

IMO Psychology is not a “real” science. My word, you can still find psychoanalytic journals and hundreds of professors teaching raw mysticism. What has been true in other professions, that the “hard” scientists work in close cooperation with the practitioners, has never occurred in psychotherapy. Indeed, our profession was poisoned by the M.D.s’ adoration of Freud and the dominance of “healing” by M.D.s whose only medical act was to intoxicate patients.

It never bothered the analysts or others infected with “id and ego” nightmares that there was no shred of evidence that psyche existed outside ancient Greek hallucinations. The whole group of them abandoned science, medicine and the body.

EEG biofeedback has had the advantage that it uses simple, physically well understood tools applied to achieve a simple, operational goal. When a large group of well trained Ph.D. psychologists were attracted by Sterman’s robust work, bang, as anyone who knew the slightest bit about learning expected, the client improved her skills, emotional stability and capacity for pursuing a goal. There always was a list of operationally defined goals, not merely for action of the meters and chart, but for action in the real world. This so horrified MD psychoanalysts that they defined signing an outcome based contract (the only kind recognized in the real world) as “unethical”. Florida even had a law against it. Fortunately the law was held to be unconstitutional, but many academics still believe that a guaranteed result is at best “tacky”. But there was a nice cluster of folk with good instruments – “if it doesn’t work, bring it back, we’ll refund your money.” GvH

Along with our physiologically based tools we now also have physiologically based measures to complement standard outcome assessments. So one could readily offer a money-back guarantee on the basis of such physiological measures. At a minimum, such measures could resolve ambiguous cases such as those where a client claims that their ADHD has not been affected even though the CPT data documents substantial improvement. With such tools as back-up, the clinician does not have to live in fear of being sand-bagged.

With respect to the true treatment failures, it would be in everyone’s interest not to charge these people. After all, the fear of treatment failure is probably the greatest barrier at the door of the NF practitioner, since such clients have typically had long histories of prior treatment failure…Removing the fear of costly treatment failure would make an enormous difference, I would suspect.

Typically, however, failure is not absolute. Simply doing the neurofeedback can be enormously clarifying for the trainee with respect to what the issues are that need to be pursued. As Edison said, even if we failed to build a light bulb, we had not failed.