Recent Critical Studies of Neurofeedback in Application to ADHD

by Siegfried Othmer, PhD | March 5th, 2014

A research group in the Netherlands has recently published two neurofeedback studies that failed to corroborate the claims for EEG-informed “Theta/beta” neurofeedback in application to ADHD. The first of these to come to my attention relied on parent and teacher ratings to establish progress (van Dongen-Boomsma et al., 2013). Such a study is easy to critique. After all, we have learned in our work not to rely too heavily on parents' observations of their ADHD children. Is that really the best that can be done in a carefully designed study? Why not rely on hard data from neuropsychological testing, for example? As it turns out, that was done as well, and the results were reported in the second paper (Vollebregt et al., 2013). That should have yielded a much more definitive answer. Alas, the outcomes were not favorable either. The experimental group did not distinguish itself from the control group in any meaningful way on any of the chosen tests, which had been selected with specific characteristics of ADHD in mind.

Still, when it comes to group studies, one wonders how many individuals might have shown highly significant improvement that was obscured once their data were merged into the group data. That hypothesis too was evaluated. A statistic called the “Reliable Change Index” was used, and it did not uncover any great winners either.
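For readers unfamiliar with the Reliable Change Index, a minimal sketch of its widely used Jacobson-Truax form follows. It asks whether one individual's pre-post change exceeds what measurement error alone would plausibly produce. The function names, the example scores, the reference standard deviation, and the test-retest reliability are all hypothetical, and whether the Dutch group used exactly this variant is an assumption.

```python
import math

def reliable_change_index(pre: float, post: float, sd_pre: float, reliability: float) -> float:
    """Jacobson-Truax Reliable Change Index for a single individual.

    sd_pre:      standard deviation of the measure in a reference sample (assumed known)
    reliability: test-retest reliability of the measure (assumed known)
    """
    # Standard error of measurement of a single score
    se_measurement = sd_pre * math.sqrt(1.0 - reliability)
    # Standard error of the difference between two scores
    s_diff = math.sqrt(2.0 * se_measurement ** 2)
    return (post - pre) / s_diff

def is_reliable_change(rci: float, z_crit: float = 1.96) -> bool:
    """Two-sided criterion at the conventional 5% level."""
    return abs(rci) > z_crit

# Hypothetical rating-scale scores for one child (lower = fewer symptoms)
rci = reliable_change_index(pre=30.0, post=20.0, sd_pre=8.0, reliability=0.8)
print(round(rci, 2), is_reliable_change(rci))  # prints: -1.98 True
```

In this hypothetical case a ten-point drop only just clears the threshold; with a reliability of 0.7 the same drop would not count as reliable change, which illustrates how hard it can be to single out individual responders.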

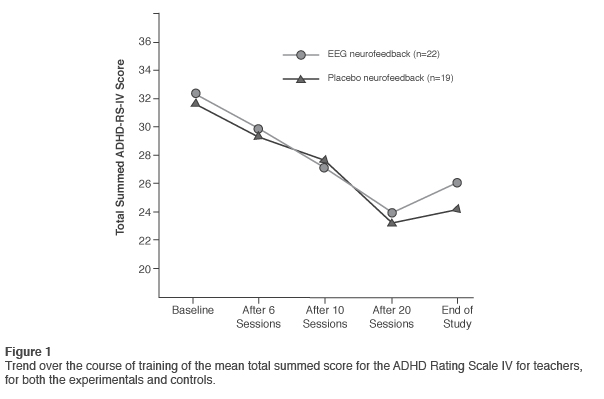

How is one to weigh these results in the context of all the other studies that have shown positive outcomes over the years? By now the efficacy of neurofeedback in application to ADHD is so thoroughly established that a single study with null results cannot shake that appraisal. Nevertheless, because these results are so contrary to expectations, they command our attention. On initial inspection, the results were rather disappointing all around. If one looks more closely, however, a more complex picture emerges. The parent and teacher ratings did in fact indicate significant improvement in the behavioral measures over the course of the study. The trends in teacher ratings on an ADHD rating scale are reproduced in Figure 1. The sham training group did just about as well as the neurofeedback group, so there was no significant treatment interaction. This is not quite the same as a null result. It is possible, after all, that the control condition also incorporated active agency of some kind.

Something similar was seen many years ago, when Henry A. Cartozzo performed a controlled study with the NeuroCybernetics instrument for his Ph.D. dissertation under Richard Gevirtz (Cartozzo et al., 1995). He used the conventional Pac-Man video game as the control condition. Both groups improved on T.O.V.A.® measures, with the results favoring the active neurofeedback training, but the comparison yielded no statistically significant advantage for the neurofeedback training. In that case, it was only too likely that the ADHD children derived some real benefit from playing the video game. A similar outcome was observed in the feasibility study undertaken at Ohio State University (Arnold et al., 2012). This study also involved a video-game-like feedback situation, and both the training and the sham groups showed “large pre-post improvement on parent ratings.” However, the results showed no significant advantage for the training cohort.

The evidence that cognitive function can be enhanced through video game playing has been accumulating over some years (Green & Bavelier, 2006). We may judge that the neurofeedback was competing with a moving target in both cases cited above, and the improvements in both cohorts were likely quite real.

In the study at hand, it is likewise possible that the children in the control group underwent an active process. All children were tasked to maintain an “active focusing state” and to attend to the modulations of the screen brightness and to the auditory tones while watching a movie. Whereas that might not matter much under ordinary circumstances, these circumstances were not ordinary in any way. The children knew they were involved in an important study; likely they were being attended by flocks of people in white coats; they expected to find meaning in the signals they were exposed to; they were coached by the clinicians; and they were tested up one side and down the other. It may well be the case that the children engaged in a mental challenge throughout the training process.

The supposition that sham training may indeed be an active process has recently been given substantial support. False feedback (sham training) was found to increase gray matter volume in brain areas involved in intention monitoring and sensory integration (Ghaziri et al., 2013). The affected voxels were similar in the veridical and sham training groups. Volume increases also occurred in the hemisphere that was not being trained. Meanwhile, a passive control group in this study showed no change in gray matter volume. There were statistically significant improvements in attentional measures even in the sham training group. Plainly these results contradict the assumption that sham training satisfies the requirements for a neutral control condition.

Under the unusual and artificial conditions of the study before us, and encouraged by the attending clinicians, even children getting sham feedback might have maintained connection with the task at hand. The resulting brain exercise over such an extended period of time could well account for the improvement in the control group. All the more reason, then, to look to neuropsychological tests to sort out what is being accomplished.

Results of Neuropsychological Testing

Can the neuropsychological tests help us to resolve the issue of specific efficacy of the neurofeedback protocol? Here too it may be useful to reflect back on history in order to calibrate our expectations. Back in 1993 our company, EEG Spectrum, sponsored a controlled study of our protocol in application to ADHD at a local university, Cal Poly Pomona. In order to kill two birds with one stone, a cognitive training group was also included in the study along with a wait-list group that would get delayed neurofeedback. For the sake of properly evaluating the cognitive skills training, a variety of cognitive tests were included in the battery.

The results were disappointingly modest, it turns out. They were particularly modest in the area of the cognitive skills testing—for both of the active arms of the study. This was in startling contrast to what we had come to expect from our own clinical work, and yet we had been in charge of all of the EEG training in this study at our Pasadena office. What could explain these bland results? It turns out that some 85% of the children being trained were already medicated for ADHD! And 15% of them were on more than one medication. The referrals for the study had come from pediatricians in the area, and they had sent those children whose problems were not being solved by the meds. It is fair to observe that the medications had already plucked the low-hanging fruit in these cases when it came to the behavioral measures. Moreover, by the terms of the study there was no communication from the clinician back to the prescribing physician with respect to re-titration of the medication as training progressed.

But what about the cognitive skills testing? Stimulant medication has not been shown to improve cognitive function systematically. Here we were up against a different issue: the children had not been selected for deficits on the chosen tests! They were selected on the basis of having ADHD-related issues for which parents were still seeking help. So whereas these children were likely to reveal a deficit here or there on the cognitive skills testing, they were unlikely to do poorly across the board. This was not like a study of depression, where everyone admitted into the study is actually depressed. The same shortcoming applies to the present study, which cautions us against over-interpreting even the negative results of the neuropsychological testing.

At issue here is a more general problem. Even in traumatic brain injury (TBI), which is unambiguously associated with a variety of cognitive deficits, a recent study found no consistent pattern of dysfunction: most of the selected tests of cognitive function failed to discriminate TBI patients from healthy controls at a group level (Bonnelle et al., 2013). The same has been found for fibromyalgia (Natelson, 2013). It is well known that subjective reports of cognitive deficits in fibromyalgia often cannot be corroborated in testing.

Reaction of the Neurofeedback Camp

The initial reaction of the neurofeedback camp was one of alarm about the impact these studies might have, just when the larger community of health professionals is starting to get interested in the field. The ISNR (International Society for Neurofeedback and Research) sought to identify shortcomings in the experimental design and thus cast doubt on the findings. First, attention was drawn to the fact that the study group included children who had been identified with Oppositional Defiant Disorder, anxiety, or specific learning disabilities (16/41). Exclusions based on comorbidities such as these are not typically made in clinical work, but scientific evaluation generally attempts a higher standard. Could this account for the negative findings? We and others have found that the presence of such comorbidities is not a barrier to success in remediation of the attentional and behavioral deficits of ADHD. Moreover, the same protocols can be effective with these comorbidities as well.

The ISNR response also draws a bead on maintaining the reward incidence at 80%, on the basis that this requires little effort to accomplish. And yet the use of auto-thresholding at a nominally 75-80% level has been commonplace in clinical work for a couple of decades by now. It is a stretch to indict a research design for adopting what has become common practice within the field and has served us nicely. More fundamentally, there is no evidence that making an effort of any kind is the pathway to success in neurofeedback. This falls more into the realm of the standard mythology of the field, one of its many unexamined assumptions.
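Auto-thresholding may be unfamiliar to some readers. The sketch below is a hypothetical illustration of the general idea, not the algorithm of any commercial system or of the study: the reward threshold is recomputed from a sliding window of recent band amplitudes so that about 80 percent of samples exceed it. The class name, the 300-sample window, the percentile rule, and the simulated amplitudes are all assumptions.

```python
import random
from collections import deque

class AutoThreshold:
    """Hypothetical percentile-based auto-threshold for a reward band.

    The threshold is recomputed from a sliding window of recent amplitudes so that
    roughly `target` of the samples in the window lie at or above it.
    """

    def __init__(self, target: float = 0.8, window: int = 300):
        self.target = target
        self.history = deque(maxlen=window)  # oldest samples drop out automatically

    def threshold(self) -> float:
        values = sorted(self.history)
        # Index of the (1 - target) quantile of the recent amplitudes
        idx = int((1.0 - self.target) * (len(values) - 1))
        return values[idx]

    def update(self, amplitude: float) -> bool:
        """Record a new amplitude sample and report whether it earns a reward."""
        self.history.append(amplitude)
        return amplitude >= self.threshold()

# Hypothetical stream of reward-band amplitudes (arbitrary units)
rng = random.Random(0)
auto = AutoThreshold(target=0.8)
rewards = [auto.update(rng.gauss(10.0, 2.0)) for _ in range(5000)]
print(f"reward incidence: {sum(rewards) / len(rewards):.2f}")
```

Because the threshold follows the trainee's own recent amplitudes, the reward incidence stays near the target whether the amplitude drifts up or down; that is the property the ISNR critique objects to.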

Additionally, a question was raised about the rationale underlying the personalized protocols used with each of the children. It is difficult to know how to read this, since this is a general problem with EEG-informed protocols. Choices have to be made about priorities in targeting, and that usually involves multiple criteria that are associated with clinical symptoms and behavioral traits. These have not been standardized. In any event, training sites included not only the standard C3 and C4 but also P3 and P4 as well as F3, F4, and Fz. These choices were likely based on several criteria, as implied in the paper. That does create an ambiguity for purposes of replication, but it does not pose a problem for the study itself. One might quarrel with particular choices if we were confronted with adverse outcomes, but that wasn’t the problem here.

Finally, the work was criticized on the basis that task acquisition in the course of the training had not been demonstrated (learning curves were not shown). The concern here must relate to the theta band, since it is already known that SMR and beta1 amplitudes do not systematically track the protocol. Theta reduction was the earliest reliable EEG change documented across independent laboratories (Lubar, 1995), and that was the primary reason Lubar originally adopted the theta/beta ratio criterion. A significant change in theta-band magnitude is therefore indeed expected, particularly when high values of theta amplitude, or of theta/beta ratio, are selected at the outset.

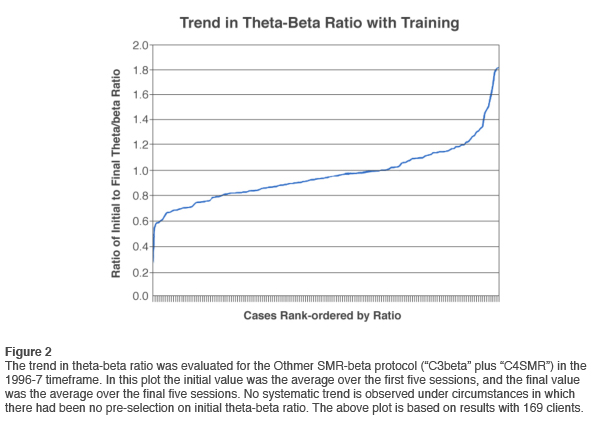

Even so, the existence of a significant training effect on theta amplitudes at the group level does not imply enough predictability at the individual level to discriminate responders from non-responders systematically. David Kaiser evaluated theta-beta ratio trends at a time in our program when we had settled on the combination of beta1 training on the left sensorimotor strip and SMR training on the right, a protocol that came to be known as “C3beta/C4SMR.” The results are shown in Figure 2, and they reveal no systematic trend at all. In fact, more trainees increased their theta-beta ratio than decreased it. The vast majority did not change very much at all in theta-beta ratio. A salient difference between our work and Lubar’s was that we did no pre-selection for high theta amplitudes or high theta/beta ratios. Once such pre-selection is performed, the problem of regression to the mean arises. In the study before us, the selection criterion was a deviation of 1.5 standard deviations, which in a normal distribution picks out only the most extreme 7 percent or so of the population. Both of these factors militate against the use of a downward trend in theta/beta ratio as an index of training effectiveness.
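The regression-to-the-mean concern can be made concrete with a small simulation. The sketch rests on assumptions that are not taken from the studies: a normally distributed theta/beta z-score, a hypothetical test-retest reliability of 0.7, and no training effect whatsoever. It first checks that a 1.5 SD cutoff corresponds to roughly the most extreme 7 percent of a normal distribution, then shows that children selected for a high baseline score drift back toward the mean at retest on their own.

```python
import math
import random

def upper_tail(z: float) -> float:
    """P(Z > z) for a standard normal variable, via the complementary error function."""
    return 0.5 * math.erfc(z / math.sqrt(2.0))

def simulate_selection(n: int = 100_000, reliability: float = 0.7,
                       cutoff_z: float = 1.5, seed: int = 1):
    """Select children whose baseline z-score exceeds cutoff_z, then retest them.

    Each simulated child has a stable 'true' score plus independent measurement
    noise on each occasion (variances chosen so the observed score has unit
    variance). No training effect of any kind is modeled. Returns
    (mean baseline z, mean retest z) for the selected group.
    """
    rng = random.Random(seed)
    true_sd = math.sqrt(reliability)
    noise_sd = math.sqrt(1.0 - reliability)
    baseline, retest = [], []
    for _ in range(n):
        true_score = rng.gauss(0.0, true_sd)
        first = true_score + rng.gauss(0.0, noise_sd)
        if first > cutoff_z:  # pre-selection on the baseline measurement only
            baseline.append(first)
            retest.append(true_score + rng.gauss(0.0, noise_sd))
    return sum(baseline) / len(baseline), sum(retest) / len(retest)

print(f"fraction beyond 1.5 SD: {upper_tail(1.5):.3f}")
before, after = simulate_selection()
print(f"selected group: baseline z = {before:.2f}, retest z = {after:.2f}")
```

Under these assumptions the selected group's mean retest score falls to roughly the reliability times its mean baseline score, a decline that could easily be mistaken for a training effect on the theta/beta ratio.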

The above considerations reveal just how challenging it is to devise a placebo-based research design that is definitive with respect to the core claims for neurofeedback in application to ADHD. The goal of an unimpeachable design does not appear to have been accomplished in this case, so the interpretation of the results remains somewhat ambiguous. That holds true even if we consider the two studies entirely on their own terms, as we have done up to now. Further questions can be raised if the studies are regarded in an even larger perspective.

Grasping the Nettle

If we allow ourselves to step out of the set of assumptions that underlie the studies before us, one might well ask why this particular approach to protocol specification got all the attention, particularly given the current thrust toward more individualized training. It is true that the design reflects what clinicians have been taught over the years with regard to EEG-guided band normalization training: just dial in the mandated protocol and run sessions. Giving primacy to EEG measures without regard to the value of an EEG parameter in identifying behavioral traits necessarily eclipses other clinical considerations. We have been opposed to the promotion of such a formulaic approach to neurofeedback since its beginning more than two decades ago. We refer to it as “faith-based neurofeedback.” A narrowly prescriptive approach renders superfluous the extensive education, training, and experience of clinicians in a kind of race to the bottom. Clinical skill is not deemed to be much of a factor in such a procedure.

A confluence of interests has led us to this point. The research agenda demands a manualizable protocol, and then clinicians are hectored to hew to the findings of research. The result is a forced march into a clinical approach that may be much more strait-jacketed than clinical realities call for. Unsurprisingly, academics buy into the assumption that clinical skill is either irrelevant or a confound, and thus regard themselves as the appropriately ‘disinterested’ arbiters of whether a therapy actually works. The fact is, however, that academic studies on neurofeedback have had a terrible track record going back to the early days of alpha training. The dearth of extensive prior clinical experience is surely a factor here.

MDs are required to undergo residency, and psychologists are required to undergo 3,000 hours of supervised psychotherapy prior to licensure. Yet ostensibly any graduate student can run a neurofeedback study with no prior experience at all. Modest results of such studies should not be surprising. Clinical skill cannot be dismissed as a non-specific factor in the training. Rather, it takes clinical skill to elicit the specific effects of neurofeedback. This is therapy at its best. It is not manualizable, and for that reason the disinterested academic is not the proper arbiter of whether neurofeedback works.

In the larger scheme, any formal study with null results will have negative fallout for our field, and that is indeed regrettable. Those who are just forming their opinion about neurofeedback will see these studies as a wholesale indictment of the field, and we will all suffer the repercussions. In fact, however, the scope of these two studies is quite circumscribed: only a specific approach to neurofeedback is implicated, and the findings have exceedingly limited generalizability. At the same time, even protocols we consider non-ideal or inappropriate often yield remarkable results. The ‘system response’ is not highly predictable in the individual case. It seems hard to believe that one could line up twenty-two youngsters, give them extensive EEG training, and have so little to show for it if competent training is being done. After all, the field first established itself with protocols such as these. How is one to explain such a disconnect? Once again it helps to draw on our collective history.

A modest overall result in the studies before us should not have come as a surprise. The contention between QEEG-based and protocol-based training was largely settled long ago, at least with respect to work with the ADHD spectrum. Let’s review what transpired. At the outset, QEEG-based training meant band-amplitude normalization training, exactly what was at issue in this study. Protocol-based training referred to the standard placements derived from Sterman’s and Lubar’s early research. The QEEG-based approach was realized early on in two commercial devices, the Autogenics and the Lexicor. Band amplitudes were tracked and trained with FFT-based methods.

The protocol-based approach was carried forward mainly with our NeuroCybernetics instrument during those years. In this case, the signal was processed with digital filters rather than FFTs, giving a more dynamic feedback response. We made the case from the outset that the more dynamic training made possible by digital filtering was preferable, given the dynamics prevailing in the SMR/beta range. In time that led to the increasing commercial success of the NeuroCybernetics system. Meanwhile, the Lexicor was withdrawn from the neurofeedback market after a few years, and the Autogenics disappeared as well. Outcomes were just not as good as with the NeuroCybernetics, which offered more dynamic training and far better visualization of the signal. This issue was extensively explored at the time.
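The practical difference between the two signal-processing approaches can be sketched generically. Nothing here reflects the actual internals of the NeuroCybernetics, Autogenics, or Lexicor systems; the band-pass biquad (from the widely used audio-EQ-cookbook formulas), the 13.5 Hz center with a Q of 4.5 (roughly a 12-15 Hz band), the 2 Hz envelope smoothing, the 256 Hz sampling rate, and the 1-second transform blocks are all assumptions chosen for illustration. For brevity the block version computes the needed DFT bins directly rather than calling an FFT routine; the values for those bins are the same.

```python
import math

def bandpass_coeffs(fs: float, f0: float, q: float):
    """Band-pass biquad (audio-EQ-cookbook form, 0 dB peak gain), normalized by a0."""
    w0 = 2.0 * math.pi * f0 / fs
    alpha = math.sin(w0) / (2.0 * q)
    a0 = 1.0 + alpha
    b = (alpha / a0, 0.0, -alpha / a0)
    a = (-2.0 * math.cos(w0) / a0, (1.0 - alpha) / a0)  # a1, a2
    return b, a

def filter_envelope(signal, fs, f0=13.5, q=4.5, smooth_hz=2.0):
    """Digital-filter approach: a new band-amplitude estimate on every sample.

    The estimate is on a rectified-mean scale (about 2/pi of the peak for a sinusoid).
    """
    (b0, b1, b2), (a1, a2) = bandpass_coeffs(fs, f0, q)
    x1 = x2 = y1 = y2 = 0.0
    k = 1.0 - math.exp(-2.0 * math.pi * smooth_hz / fs)  # one-pole smoothing factor
    env, out = 0.0, []
    for x in signal:
        y = b0 * x + b1 * x1 + b2 * x2 - a1 * y1 - a2 * y2
        x2, x1, y2, y1 = x1, x, y1, y
        env += k * (abs(y) - env)  # rectify and smooth
        out.append(env)
    return out

def block_band_amplitude(signal, fs, lo=12.0, hi=15.0, block_s=1.0):
    """Block-transform approach: one band amplitude per non-overlapping block.

    Bin spacing is 1 / block_s Hz; the bins between lo and hi are summed in power.
    """
    n = int(fs * block_s)
    values = []
    for start in range(0, len(signal) - n + 1, n):
        block = signal[start:start + n]
        power = 0.0
        for k in range(math.ceil(lo * block_s), math.floor(hi * block_s) + 1):
            re = sum(x * math.cos(2 * math.pi * k * i / n) for i, x in enumerate(block))
            im = sum(x * math.sin(2 * math.pi * k * i / n) for i, x in enumerate(block))
            power += re * re + im * im
        values.append(2.0 * math.sqrt(power) / n)  # peak amplitude of an in-band sinusoid
    return values

fs = 256
t = [i / fs for i in range(10 * fs)]                    # ten seconds of data
smr = [math.sin(2 * math.pi * 13.0 * ti) for ti in t]   # hypothetical in-band rhythm
env = filter_envelope(smr, fs)
blocks = block_band_amplitude(smr, fs)
print(len(env), "filter updates vs", len(blocks), "block updates")
# prints: 2560 filter updates vs 10 block updates
```

The filter trades some frequency selectivity for an estimate that moves continuously with the signal, whereas the block transform can only report once per block; that difference in responsiveness is the sense in which filtering gives the more dynamic feedback described above.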

David Kaiser evaluated at length whether the dimensionality of the feedback display made a difference in outcomes. The conventional two-dimensional data representation was compared with a three-dimensional one in which data were shown receding into the background with time. The games that represented the underlying dynamics most faithfully in 3D (Highways and Islands) also yielded the best results. Clearly the brain was picking up on the dynamics of the band activity; at a minimum, that was helpful for engagement. I judge from the description that the feedback used in the present studies was governed more by threshold crossings than by the ongoing dynamics, which could help to account for the ambiguous results.

In the studies before us, researchers faced the issue of whether to do SMR or beta reinforcement in a particular case. They used both QEEG criteria and considerations of under-arousal and over-arousal. This too has an interesting history. In the early days of EEG-specified protocols, it became clear fairly quickly that whereas the EEG could indeed be helpful with the inhibit strategy, it wasn’t a good guide to the reward strategy. It was quickly abandoned in that role even by proponents of a QEEG-based training strategy. This has never been a good way to choose between SMR and beta reinforcement.

As for the arousal model, we proposed this originally as a basis for understanding the differential response to SMR and beta training in our approach. At the time neither Lubar nor Sterman used the term arousal in their descriptions of the process. The original under-arousal model of ADHD by Satterfield was no longer in vogue, and the focus was now on specificity (e.g., motoric excitability) rather than on global variables such as arousal.

We also made serious efforts over those years to determine whether beta training was preferentially beneficial for remediating inattention, and SMR training more applicable to the impulsivity aspect of ADHD. Tamsen Thorpe devoted her dissertation research to this issue, and David Kaiser subjected reams of additional data to such analyses. No systematic relationship could ever be nailed down at that time.

The arousal model fared only slightly better. In a given individual, training at higher frequencies tended to be more activating, whereas training at lower frequencies tended to be more calming. But there was never a clear relationship between frequency and arousal level: EEG reward frequency was not going to be a calibration tool, a meter stick if you will, for arousal level. Arousal remained viable as a concept, but it was as unquantifiable as ever. We could talk about it only in relative terms, never absolute. The far more systematic relationship that emerged was the connection of reward frequency with laterality: in the steady state, the right hemisphere was always trained at the lower of the two bands.

And then it was observed that if the ‘correct’ frequency was trained, then arousal regulation improved irrespective of whether the starting point had been characterized as under-aroused or over-aroused. And ADHD symptoms responded irrespective of whether inattention or impulsivity dominated. The optimization model developed around the concept that we were really targeting disregulation per se, not the specific manifestation of under- or over-arousal, inattention or impulsivity. But in teasing out this model, laterality had to be taken into account. The right and left hemispheres were subject to different failure modes. Each hemisphere needed to be trained at its own optimal response frequency. Since both hemispheres contributed to the clinical syndrome of ADHD, it follows that two protocols are always needed rather than just one whenever lateralized protocols are invoked.

The Bigger Picture

Over the past twenty years the field of neurofeedback has witnessed a grand experiment of nature. On the one hand, we have had the official, standard model of ‘scientific neurofeedback’ since the early nineties, and on the other we have been working in a less constrained, open-ended clinical approach where the search is ever on to find what works with a particular client, guided by considerations of functional neuro-anatomy and EEG spectral properties. While researchers posited that only formal research had adequate controls and rigorous truth tests to establish the claims for neurofeedback, scientist/practitioners relied on informed, skillful observation to sort out what worked from what didn’t in A/B within-subject comparisons, one client at a time.

The studies now before us could just as well have been performed twenty years earlier, revealing a stasis in thinking that is plainly uncharacteristic of a technology at the scientific frontier. But the strategy has been purposeful throughout: it was to establish a gold standard from the outset. Stasis has been a deliberate choice all along.

On the other side of the divide, we are now training nearly every one of our clients with a capability that has existed in its present form for less than two years, and based on a clinical method that has existed for only seven years. This continues an arc of innovation from the early days to the present—and that just covers our part in a panoply of innovation within the field at large.

Over all this time, a number of scientists denounced all of the new findings that were emerging from the less structured clinical milieu. The closed-mindedness was legendary—and very persistent. First there was the campaign against the re-emergence of alpha-training with Eugene Peniston’s work. In that same time frame, our reports of recovery from depression with a beta training protocol were not well received. Our reports with respect to the elimination of PMS symptoms were treated poorly. The same held for our reports with respect to migraine. Our reports of getting quick responses in the training were simply not credited. Indignation greeted our reports that we were working well with very young children. Further objections were raised when we reported on successful recovery from suicidality in connection with Bipolar Disorder in 1995. Later came the initial successes with the autism spectrum, Parkinson’s, and Developmental Trauma (Reactive Attachment Disorder) from within our practitioner network.

Our methods were questioned as well—in all their particulars. The use of bipolar montage was vigorously challenged, in complete disregard of the fact that Sterman’s seminal published work had been based on bipolar placements. Neurofeedback was being wedded to the localization hypothesis of neuropsychology, in considerable contrast to the network models of the present day.

Matters got even worse when we adopted inter-hemispheric training for the enhancement of brain stability. On what theoretical basis would one choose to do that? And the roof really caved in when we took the method to lower frequencies, including the theta band, the delta band, and lower. The questioning of infra-low frequency training continues to this day within the ISNR community—some seven years into the project!

It is clear by now that the critics have been wrong at every turn, and that has been true for more than twenty years. If the domain of stimulation-based technologies is included in this discussion, then it becomes unambiguously clear that the brain can respond profoundly to even brief stimulations or reinforcements. It can do so over a wide range of frequencies, and in a manner that is not diagnostically specific. Indeed, rapid responding is also observed with coherence-based training. So even within the domain of EEG-specified training, matters have moved well beyond the original model of band-normalization training. Pulling all this together, it is apparent that there are many pathways to enhancing self-regulatory capacity. This leaves no place for narrow prescriptions.

A Resolution

The defensive ISNR response to the publication by van Dongen-Boomsma et al. shows that the study goes to the core of what is still being claimed to this day. There is great irony here. The rejoinder to our various clinical reports over the years has always been that they were not the outcome of formal studies. We would respond that our track record suffices to establish practice-based evidence as a valid pathway for establishing clinical effectiveness. Formal group studies with independent controls are not needed. Knowledge can be firmed up in series rather than in parallel, based on the principles of Bayesian inference. We would argue further that the same goes for the other innovative technologies such as LENS, pROSHI, NeurOptimal, and NeuroField, none of which reached their current status through formal studies.
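The point about firming up knowledge in series can be illustrated with the simplest conjugate Bayesian model. This is an illustrative choice, not a method described in this article: each client's outcome is treated as a responder/non-responder observation, the response rate is given a uniform Beta prior, and the client outcomes are hypothetical. Updating one client at a time yields exactly the same posterior as analyzing the whole series at once.

```python
from fractions import Fraction

def update(alpha: int, beta: int, responded: bool):
    """One conjugate Beta-Bernoulli update: a responder adds to alpha, a non-responder to beta."""
    return (alpha + 1, beta) if responded else (alpha, beta + 1)

def posterior_mean(alpha: int, beta: int) -> Fraction:
    """Mean of a Beta(alpha, beta) distribution: the current estimate of the response rate."""
    return Fraction(alpha, alpha + beta)

# Hypothetical outcomes for clients seen one after another (True = responded)
outcomes = [True, True, False, True, True, True, False, True]

alpha, beta = 1, 1  # uniform Beta(1, 1) prior on the response rate
for responded in outcomes:
    alpha, beta = update(alpha, beta, responded)  # knowledge accumulates in series

# The same data analyzed all at once ("in parallel") gives the identical posterior
responders = sum(outcomes)
batch = (1 + responders, 1 + len(outcomes) - responders)
print((alpha, beta) == batch, posterior_mean(alpha, beta))  # prints: True 7/10
```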

If academic studies are held to be the decisive standard, however, then one cannot discount adverse findings. Now that we have two mutually confirming formal studies that fail to support the band amplitude normalization approach to ADHD, the implications are being strenuously resisted. These adverse outcomes should instead compel a sober reappraisal of what has been put forward as the preferred, “scientific” approach to protocol selection. The field needs to move beyond faith-based to evidence-based methods of neurofeedback—specifically, practice-based evidence.

The claim that EEG deviations define protocol exclusively needs to be taken off life-support. It follows that doing a full EEG assessment before undertaking training cannot be seen as obligatory, especially when EEG analysis is poorly understood by many so-called experts. Detaching the ISNR from the doctrine that inspired these studies will finally allow the creation of the village square that this field has needed. If that occurs, then the publication of these papers will have had a positive fallout after all. Contrast that with a contrary scenario: Suppose these studies had shown strongly positive outcomes. They would have helped to consolidate the case for the monoculture model of neurofeedback, to the long-term detriment of the field.

In the short run, we will have to endure the blows that come our way from the critics who will celebrate this adverse report. In the long run, however, these researchers will have done us a great service. This is the kind of issue that can only be resolved with finality through formal studies. Those who favor this kind of neurofeedback should heed the message rather than try to escape its implications: The protocol schema evaluated in these studies has not been state of the art for twenty years.

Summary and Conclusion

Two studies conducted on the same group of participants are both consistent with the null hypothesis when considered on their own terms. More than likely, however, the control condition was not neutral, but rather also led to symptom improvement by virtue of induced physiological change. The results of neuropsychological testing likely suffered from the lack of homogeneity in the sample, which was not restricted to children who showed an initial deficit on the variables under test.

The fact remains, however, that the study did not reveal robust positive effects of the neurofeedback, as other such studies and even a meta-analysis have led us to expect, not to mention our own early clinical work over the course of many years. This testifies to the intrinsic weakness of the chosen protocol. The results of this study are totally out of line with expectations based on more modern methods. These utilize bipolar placement or other types of coherence-training to address network relations rather than single-site properties, and they typically incorporate pre-frontal and parietal placements in targeting.

The studies before us do help to round out the historical record, reflecting as they do an earlier period in the developmental history of the field, but they bear little relevance to the current state of affairs in neurofeedback.

References

Bonnelle, V., Ham, T.E., Leech, R., Kinnunen, K.M., Mehta, M.A., Greenwood, R.J., & Sharp, D.J. (2013). Salience network integrity predicts default mode network function after traumatic brain injury. Proceedings of the National Academy of Sciences. www.pnas.org/cgi/doi/10.1073/pnas.1113455109

Cartozzo, H.A., Jacobs, D., & Gevirtz, R.N. (1995). EEG biofeedback and the remediation of ADHD symptomatology: A controlled treatment outcome study. Presented at the Annual Conference of the Association for Applied Psychophysiology and Biofeedback, Cincinnati, Ohio, March.

Ghaziri, J., Tucholka, A., Larue, V., Blanchette-Sylvestre, M., Reyburn, G., Gilbert, G., Lévesque, J., & Beauregard, M. (2013). Neurofeedback training induces changes in white and gray matter. Clinical EEG and Neuroscience, 44, 265-272.

Green, C.S., & Bavelier, D. (2006). The Cognitive Neuroscience of Video Games. http://isites.harvard.edu/fs/docs/icb.topic951141.files/cognitiveNeuroOfVideoGames-greenBavelier.pdf

Lubar, J.F. (1995). Neurofeedback treatment of attention-deficit disorders. In M.S. Schwartz (Ed.), Biofeedback: A practitioner’s guide (2nd ed., pp. 493-522). New York: Guilford Press.

Natelson, B.H. (2013). Brain dysfunction as one cause of CFS symptoms including difficulty with attention and concentration. Frontiers in Physiology, 4, 1-4.

van Dongen-Boomsma, M., Vollebregt, M.A., Slaats-Willemse, D., & Buitelaar, J.K. (2013). A Randomized Placebo-Controlled Trial of Electroencephalographic (EEG) Neurofeedback in Children With Attention-Deficit/Hyperactivity Disorder. Focus on Childhood and Adolescent Mental Health. Journal of Clinical Psychiatry, 74(8), 821-827.

Vollebregt, M.A., van Dongen-Boomsma, M., Buitelaar, J.K., & Slaats-Willemse, D. (2013). Does EEG-neurofeedback improve neurocognitive functioning in children with attention-deficit/hyperactivity disorder? A systematic review and a double-blind placebo-controlled study. Journal of Child Psychology and Psychiatry. doi:10.1111/jcpp.12143 (available ahead of print)

On careful analysis, the studies were poorly designed and executed in the way treatment was delivered. Auto-thresholding violates the rules of operant conditioning, makes the patient vulnerable to null results or side effects, and should be given a wide berth by a careful clinician. These studies have fatal design flaws too numerous to go into here.

The ISNR critique of the study was a communal effort, and presumably raised the most obvious criticisms of the study. These I have addressed. If you have others that go to the heart of the matter, I would be happy to address them.

What surprises me in what you write is the certainty with which you make pronouncements.

Your reaction to auto-thresholding is classic: It ‘violates the rules’ as you understand them.

Data not required. Why should the brain obey the rules made up by people who weren’t taking the brain into account at all? B.F. Skinner said as late as 1974 that it was a mistake to look to the brain for an understanding of human behavior.

We have been using auto-thresholding for over twenty years in all of our designs.

On the NeuroCybernetics, the threshold could be reset with a button push to maintain a constant level of difficulty. That worked fine. The same capability was introduced into BrainMaster. Eventually we went to complete auto-thresholding with BioExplorer, and that is what we currently have on Cygnet. It obviously works, and it works well.
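To make the idea of auto-thresholding concrete, here is a minimal Python sketch, assuming a single reward band in which feedback is given whenever band amplitude exceeds the threshold. The threshold is recomputed from a rolling window of recent amplitudes so that roughly a fixed fraction of samples earn a reward, which keeps the level of difficulty constant as the EEG drifts. The class name AutoThreshold, the 300-sample window and the 70% target reward rate are hypothetical choices for illustration only; this is not the algorithm used in NeuroCybernetics, BrainMaster, BioExplorer, or Cygnet.

```python
from collections import deque


class AutoThreshold:
    """Rolling auto-threshold for a single 'reward when above' band.

    Hypothetical sketch: the window length and target reward rate are
    illustrative assumptions, not parameters of any commercial system.
    """

    def __init__(self, target_rate: float = 0.7, window: int = 300) -> None:
        if not 0.0 < target_rate < 1.0:
            raise ValueError("target_rate must lie strictly between 0 and 1")
        if window < 1:
            raise ValueError("window must be at least 1 sample")
        self.target_rate = target_rate
        self.recent = deque(maxlen=window)  # most recent band amplitudes
        self.threshold = None  # undefined until the first sample arrives

    def update(self, amplitude: float) -> bool:
        """Add one amplitude sample; return True if it earns a reward."""
        self.recent.append(amplitude)
        ordered = sorted(self.recent)
        # Place the threshold at the (1 - target_rate) quantile of the window,
        # so that about target_rate of recent samples lie above it.
        idx = int((1.0 - self.target_rate) * (len(ordered) - 1))
        self.threshold = ordered[idx]
        return amplitude > self.threshold


if __name__ == "__main__":
    import random

    rng = random.Random(1)
    auto = AutoThreshold()
    # The signal level doubles partway through; the reward rate should not.
    for level in (10.0, 20.0):
        for _ in range(300):  # let the window adapt to the new level
            auto.update(rng.gauss(level, level / 5))
        hits = [auto.update(rng.gauss(level, level / 5)) for _ in range(2000)]
        print(f"level {level}: reward rate {sum(hits) / len(hits):.2f}, "
              f"threshold {auto.threshold:.1f}")
```

Because the threshold tracks a percentile of the trainee's own recent signal, a sustained shift in amplitude moves the threshold with it rather than changing the reward rate. A manual button-push reset would correspond, roughly, to recomputing the threshold only on demand instead of continuously.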

Your response is a case in point of my observation that critics in the neurofeedback field have consistently rested on their theories to discount confounding data. That has almost always been a mistake in the history of science.

Siegfried Othmer

Regardless of the results of this study, I applaud those who make these studies happen. We should research this field more to come up with innovative solutions to such a common problem. I hope this field will get more attention.

Indeed the academic interest that is now developing is to be welcomed. However, let’s understand what is really happening here. You speak of coming up with innovative solutions, but that’s not what is going on here. The research is retrospective, trying to prove formally what has already been introduced into practice. It is “Re-search”, not “search”.

The search was done many years ago in this case, and the people who carried it out, such as Barry Sterman, Joel Lubar, and Niels Birbaumer, were badly maligned for doing so by fellow academics at the time. It is they who should be applauded in the first instance. They put their professional reputations at stake; it is they who made this field into ‘safe ground’ for present-day academics to enter without fear for their reputations.

What transpired between then (40 years ago) and now has been a bloom of innovation that was entirely the doing of scientist/practitioners operating in the clinical realm. These are the true innovators in this field, but that is very difficult for academics to acknowledge. So the innovation that has occurred is largely ignored, and as a result the research that is undertaken has a kind of Rip Van Winkle air about it. It is rooted in the distant past. Praise it if you like, but it will soon be an irrelevance.

Good to see some work not supporting that protocol. I have never been in favor of it. My own work indicates that alpha abnormalities are better indicators of both ADHD and anxiety problems. I have found a biomarker for PTSD that is also in the alpha band.

Also, I like the results of your reaction time (RT) analysis. I have been using the presence of non-Gaussian “bumps” in the tail of the RT distribution to predict the presence of electrographic seizures. In fact, I was going to present on “Chronometric Correlates of Electrographic Seizures” here locally at the Annual Meeting of the Missouri Department of Mental Health, but they rejected my offering, so I may talk about it somewhere else this year. Before I could present on the subject, I needed a good recording of an electrographic seizure, which I finally obtained.

I would estimate that the majority of psychologists and other NFB researchers have NO strong statistical training in Bayesian inference methods. I found this interesting statement on Wikipedia.

“Despite growth of Bayesian research, most undergraduate teaching is still based on frequentist statistics. Nonetheless, Bayesian methods are widely accepted and used, such as for example in the field of machine learning.”

It makes perfect sense that a control group would derive some benefit from sham feedback. In an “ideal” controlled study, a control group would experience all of the same preparation and instruction as the experimental group. The only difference is the veracity of the actual feedback. A person will get some benefit merely from relaxing their muscles, quieting their mind, and focusing on the task in front of them. The Interactive Metronome is a perfect example. The EEG is not involved in the feedback transaction at all, yet the exercise of attending to the audio signal improves attention. Or think of someone doing weight training: even if the weight is too great and the iron never lifts off the rack, the person will still get some health benefit from merely trying.