The Connectivity Conference

by Siegfried Othmer | August 4th, 2005

The Connectivity Conference in Armonk, New York, brought together a number of people engaged in synchrony and coherence training or in the corresponding analysis. Speakers attended at the invitation of Michael Gismondi, the organizer of the conference. As a user of a variety of neurofeedback instruments, he had realized that a focus on coherence training would be very timely.

Joe Horvat kicked things off with a review of his approach to coherence training. The original point of departure was furnished by Thatcher, who was probably the first to highlight coherence deviations as a hallmark of brain injury. On the practical side, Bob Crago may have been one of the first practitioners to actually use coherence training. Joe had started out with protocol-based training in the manner of Ayers and Othmer, and at some point introduced coherence training with a treatment-resistant six-year-old. The coherence Z-score went from +3.7 to −2.64 in three sessions! While some symptoms resolved with this new training, other symptoms returned, quite possibly as a result of the “overshoot” in coherence. The rapidity of change so panicked Joe that he did not go back to coherence training for a number of years.

Joe found that one way to tame coherence training is to put it up front, ahead of the amplitude-based or protocol-based training. Then the effects are more measured and gradual. When it is done later in the course of training, the response tends to be more rapid and less manageable. This observation actually stands as a nice back-handed confirmation that protocol-based training does have a general effect on the flexibility of brain functional organization.

Joe found two principal subtypes of deviations in his work (which was mainly with traumatic brain injury). In the first, elevated coherence measures tended to be associated with diminished phase measures. This is what one would expect. The phase measure here is a time average of the relative phase between the two sites. When coherence between the two sites is high, the relative phase is more constrained to stable values, and will therefore show less variation over time. Under circumstances such as these, Joe was always comfortable training coherence down. And when the coherence normalizes, the phase measure will tend to normalize as well. Joe never found it useful to train the phase measure directly. No surprise there.

The second major category is the complement of the above, namely diminished coherence and elevated phase. When there is insufficient linkage between the sites, then of course the relative phase will be more random. In this case, coherence up-training is brought to bear, and again an attempt to train down mean relative phase directly is not found to be helpful.
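The relationship Joe relies on, that a constrained relative phase goes with high coherence and a wandering one with low coherence, can be illustrated numerically. The sketch below uses the length of the mean unit phasor of the relative phase (often called a phase-locking value) as a simple stand-in for coherence; it is not the coherence formula of any database mentioned here, and the function names and phase samples are hypothetical, chosen only for illustration.

```python
import cmath
import math


def phase_consistency(rel_phases_deg):
    """Length of the mean unit phasor of the relative phase (0 to 1).

    Near 1 when the relative phase between two sites stays at a stable value,
    near 0 when it wanders; used here only as a stand-in for coherence.
    """
    n = len(rel_phases_deg)
    mean_phasor = sum(cmath.exp(1j * math.radians(p)) for p in rel_phases_deg) / n
    return abs(mean_phasor)


def phase_spread_deg(rel_phases_deg):
    """Circular standard deviation of the relative phase, in degrees."""
    r = min(phase_consistency(rel_phases_deg), 1.0)  # guard against rounding above 1
    if r == 0.0:
        return math.inf  # perfectly balanced phases: spread is unbounded
    return math.degrees(math.sqrt(-2.0 * math.log(r)))


# Hypothetical relative-phase samples (degrees) for two pairs of sites.
tightly_linked = [10, 12, 8, 11, 9, 10]
loosely_linked = [0, 90, 180, 270, 45, 200]

for name, phases in [("tight", tightly_linked), ("loose", loosely_linked)]:
    print(name, round(phase_consistency(phases), 3), round(phase_spread_deg(phases), 1))
```

The tightly linked pair yields a consistency near 1 and a spread of a degree or two, while the loosely linked pair yields a low consistency and a spread of over a hundred degrees: high linkage and low phase variability travel together, which is why training one tends to normalize the other.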

Joe gave an example of training a child with Reactive Attachment Disorder in which there were apparently effects of over-training. As it happens, this was a child with virtually no deviations at all on the brain map, but a follow-up brain map after protocol-based training showed insufficient coherence in delta. An attempt at coherence normalization training led to an initial improvement in symptoms and then a worsening. Joe has never done coherence up-training in the delta band since. Now at one level this is very much the way we work as well, responding to adverse consequences that follow the training, and making the generous assumption that the training probably had something to do with whatever we find, good or bad. But in this case, one may wonder. Reactive Attachment Disorder children remain notoriously volatile until the training is well along, and this event may in fact have been a case of “post hoc, ergo propter hoc” that George loves to go on and on about. On the other hand, experiencing a raging, tantruming child after a neurofeedback session is enough to put anyone off their feed.

The natural hierarchy for coherence training that Joe has adopted is to target coherence anomalies first in the delta band, then in the theta band, and then in the beta band. If linkages show both excesses and deficits in the beta band, he will tackle the excesses first. Alpha anomalies are usually found to resolve when the others do, and don’t need to be targeted specifically. When confronted with both long and short linkages that are deviant, he will tackle the long links first, finding them harder to train; once that is accomplished, the shorter links often resolve without explicit targeting. Coherence training is undertaken as the first objective unless the person exhibits sleep issues or PTSD. In the case of sleep disregulation, standard protocol-based training is employed, and in the case of PTSD Joe falls back on his EMDR experience and work with desensitization techniques. For PTSD clients, another map is done before neurofeedback is begun, and the difference between the maps demonstrates that EMDR treatment alone is also capable of altering brain maps significantly. When all is said and done, Joe declares his basic objective for the training: “I am training to clear a map…” On the other hand, Joe acknowledges that in the process of clearing coherence anomalies, inter-hemispheric asymmetries may in fact get worse, or even show up where they weren’t present before. There is no perfect world.
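Joe's ordering rules can be written down as a sort key, which makes their interaction explicit. This is a hypothetical encoding, not software Joe uses: the `CoherenceAnomaly` record and `training_order` function are invented for illustration, the relative precedence of the band, link-length, and excess-before-deficit rules is our assumption (the talk did not specify it), "most deviant first" within a tier is likewise assumed, and the sleep and PTSD exceptions are not modeled.

```python
from dataclasses import dataclass

# Hypothetical encoding of the hierarchy described above.
# Alpha is deliberately absent: alpha anomalies are expected to resolve on their own.
BAND_RANK = {"delta": 0, "theta": 1, "beta": 2}


@dataclass
class CoherenceAnomaly:
    link: str        # site pair, e.g. "C3-C4"
    band: str        # "delta", "theta", "alpha" or "beta"
    z: float         # signed coherence Z-score: > 0 excess, < 0 deficit
    long_link: bool  # True for long-distance linkages


def training_order(anomalies):
    """Return the anomalies to target, in the order they would be tackled."""
    targets = [a for a in anomalies if a.band in BAND_RANK]
    return sorted(
        targets,
        key=lambda a: (
            BAND_RANK[a.band],             # delta, then theta, then beta
            not a.long_link,               # long links before short ones
            a.band == "beta" and a.z < 0,  # in beta, excesses before deficits
            -abs(a.z),                     # assumption: most deviant first
        ),
    )


example = [
    CoherenceAnomaly("F3-F4", "beta", -2.5, True),
    CoherenceAnomaly("C3-C4", "beta", 2.8, True),
    CoherenceAnomaly("O1-O2", "alpha", 3.1, True),
    CoherenceAnomaly("T3-T5", "theta", 2.2, False),
    CoherenceAnomaly("F7-P3", "delta", -2.1, True),
]
print([a.link for a in training_order(example)])
```

The alpha anomaly drops out entirely, and within beta the excess at C3-C4 is scheduled ahead of the deficit at F3-F4.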

Originally only Lexicor provided for coherence training. The NeuroCybernetics approximated coherence work by providing for training on the sum of channels to either favor or disfavor synchrony between the channels with up- and down-training, respectively. Somewhat apologetically we originally referred to this as “poor man’s coherence training.” Some wags then responded that given the price of the NeuroCybernetics it should really have been called rich man’s coherence training…

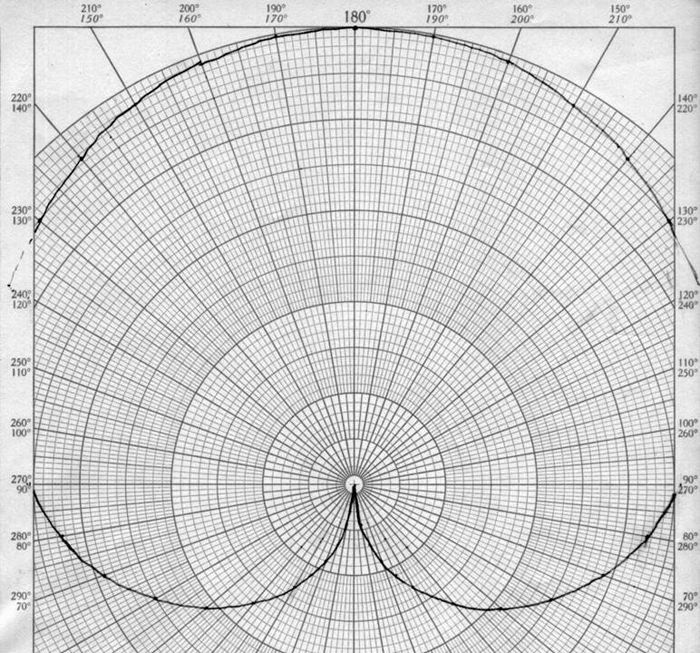

Our intent was to introduce some honesty into the discussion. Synchrony training is not the same as coherence training, although synchrony is actually not a bad stalking horse for coherence. We know that in principle two sites can have a persistent phase difference between them and yet be coherent. But work by Bill Hudspeth has shown that that has practical limits when it comes to the brain. When the equivalent time delay between sites (i.e. the phase difference expressed in units of time) is greater than some ten milliseconds, the coherence between sites tends never to be very large as a practical matter. We also know that the sum of channels has only a very gentle dependence on relative phase over a very large range in phase angle. This is shown in Figure 1 below. As a result, for purposes of brain-training the detection of large-amplitude excursions in synchrony is nearly the equivalent of detection of excursions in coherence.
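Two quantitative points in the paragraph above can be checked with a few lines: how gently the summed signal depends on relative phase, and how a phase difference converts into an equivalent time delay. The sketch assumes two idealized sinusoids of equal amplitude 100 (the magnitude used in the Figure 1 caption); real EEG is neither stationary nor equal in amplitude across sites, and the function names are hypothetical.

```python
import math


def summed_amplitude(phase_deg, a=100.0):
    """Amplitude of the sum of two equal sinusoids (amplitude a) offset by phase_deg."""
    return 2.0 * a * abs(math.cos(math.radians(phase_deg) / 2.0))


def phase_to_delay_ms(phase_deg, freq_hz):
    """Equivalent time delay, in milliseconds, of a phase difference at freq_hz."""
    return 1000.0 * (phase_deg / 360.0) / freq_hz


for phase in (0, 30, 60, 90, 120):
    print(phase, round(summed_amplitude(phase), 1))

# A 10 ms delay corresponds to 36 degrees at 10 Hz and 72 degrees at 20 Hz.
print(phase_to_delay_ms(36, 10), phase_to_delay_ms(72, 20))
```

Within ±60 degrees the summed amplitude stays above about 87% of its maximum, so the sum is insensitive to modest phase offsets; and the ten-millisecond limit mentioned above corresponds to only a few tens of degrees at alpha and beta frequencies.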

There was a lot of discussion about how coherence was originally calculated in the Lexicor, a matter that could not be resolved because Lexicor has never disclosed its formulation. But in fact it hardly matters at all. Coherence training in the moment only needs to discriminate between coherence increasing and coherence decreasing. We are just engaged here in a sophisticated game of warmer/colder. As long as the measure we employ replicates the actual coherence in this simple respect, it can be used for training purposes. Synchrony training serves in that role, and so does whatever calculation was performed by Lexicor. When it comes to determining the actual coherence between sites, a longer-term record is needed, and reference needs to be made back to a database, where presumably the rigorously correct calculation of coherence is being made.

These requirements are totally at odds with what is required for good training. When it comes to training, we require neither accuracy in coherence nor even precision. We need to know the rate of change of coherence, and in that regard it is even sufficient just to know its sign. What does matter is that we have this information promptly, and with respect to the then-ongoing activity in the brain. There is simply no way that the rapidly-changing information we require for feedback can give us an “accurate” measure of coherence, and there is no way that an “accurate” measure of coherence can give us an indication of how to train the brain in a particular moment. We must approach each of these tasks somewhat independently.
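The "warmer/colder" argument can be made concrete: if only the sign of the change in a coherence estimate drives the feedback, then any estimator that tracks the true coherence's direction of change will produce the same reward decisions, even if it carries a constant bias or a positive scale factor. The function below is a hypothetical sketch of that decision rule; the estimate values are illustrative, and the `deadband` parameter is our own addition for ignoring negligible changes.

```python
def warmer_colder(estimates, direction=+1, deadband=0.0):
    """Convert successive short-window coherence estimates into reward decisions.

    direction=+1 rewards increases (up-training), -1 rewards decreases
    (down-training). Only the sign of each change is used; the absolute
    value of the estimate never enters the decision. deadband (hypothetical)
    ignores changes too small to be meaningful.
    """
    rewards = []
    for previous, current in zip(estimates, estimates[1:]):
        rewards.append(direction * (current - previous) > deadband)
    return rewards


# Illustrative estimates only; a constant bias would not change any decision.
stream = [0.40, 0.45, 0.43, 0.50, 0.50]
print(warmer_colder(stream))                # up-training
print(warmer_colder(stream, direction=-1))  # down-training
```

Shifting every estimate by the same offset leaves the reward sequence untouched, which is the sense in which training needs neither accuracy nor precision, only prompt information about direction.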

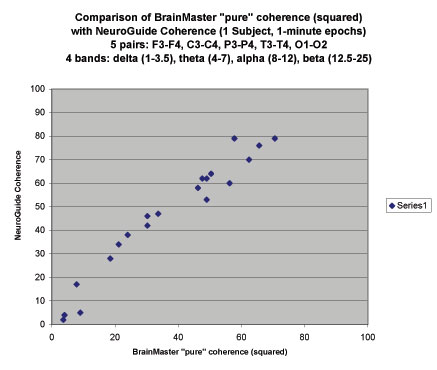

With the discussion of the Lexicor methodology still hanging in the air, Tom Collura presented an elegant way in which coherence could be calculated readily with minimal delays. A time average of that quantity could then yield the appropriate measure of coherence, which should line up with the databases. As it happens, Tom verified this during the conference itself, taking measurements from Joe Horvat’s scalp and comparing his own coherence measure on the BrainMaster with that of Bob Thatcher’s Neuroguide. The result is shown in Figure 2.
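Tom's actual formulation is not described here, so the following is only a generic sketch of how a low-delay coherence estimate can be updated sample by sample: each channel is complex-demodulated at the training frequency and low-pass filtered, and the cross- and auto-products are then exponentially averaged. The class, its parameters (`avg_alpha`, `demod_alpha`), and the synthetic test signals are all hypothetical; this is not the BrainMaster algorithm.

```python
import cmath
import math


class RunningCoherence:
    """Exponentially weighted magnitude-squared coherence between two channels
    at one frequency, updated sample by sample (a generic sketch)."""

    def __init__(self, freq_hz, fs_hz, avg_alpha, demod_alpha=0.05):
        self.w = 2.0 * math.pi * freq_hz / fs_hz  # radians per sample
        self.avg_alpha = avg_alpha      # smoothing of the spectral products
        self.demod_alpha = demod_alpha  # low-pass on the demodulated signals
        self.n = 0
        self.zx = 0j    # complex amplitude of channel x near freq_hz
        self.zy = 0j    # complex amplitude of channel y near freq_hz
        self.sxy = 0j   # running cross product
        self.sxx = 0.0  # running auto products
        self.syy = 0.0

    def update(self, x, y):
        """Add one sample from each channel and return the current coherence."""
        # Complex demodulation: shift freq_hz down to 0 Hz, then low-pass.
        ref = cmath.exp(-1j * self.w * self.n)
        self.n += 1
        b = self.demod_alpha
        self.zx += b * (x * ref - self.zx)
        self.zy += b * (y * ref - self.zy)
        # Exponentially weighted averages of cross- and auto-products.
        a = self.avg_alpha
        self.sxy += a * (self.zx * self.zy.conjugate() - self.sxy)
        self.sxx += a * (abs(self.zx) ** 2 - self.sxx)
        self.syy += a * (abs(self.zy) ** 2 - self.syy)
        if self.sxx == 0.0 or self.syy == 0.0:
            return 0.0
        return abs(self.sxy) ** 2 / (self.sxx * self.syy)


def run(freq_y_hz, phase_y=0.6, fs=256, seconds=10, avg_alpha=0.01):
    """Feed two synthetic sinusoids through the estimator; return the final value."""
    est = RunningCoherence(freq_hz=10.0, fs_hz=fs, avg_alpha=avg_alpha)
    c = 0.0
    for n in range(fs * seconds):
        t = n / fs
        x = math.sin(2 * math.pi * 10.0 * t)
        y = 0.5 * math.sin(2 * math.pi * freq_y_hz * t + phase_y)
        c = est.update(x, y)
    return c


print(round(run(10.0), 3))  # same frequency, fixed phase offset: near 1
print(round(run(13.0), 3))  # different frequency, drifting phase: near 0
```

A fixed phase offset still yields high coherence, as expected, while a drifting phase does not. The choice of `avg_alpha` sets the tradeoff: a larger value responds quickly enough for moment-to-moment feedback, while a smaller value settles toward a stable session-level figure, so one estimator can in principle serve both purposes.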

Once this essential correspondence has been established, it is clear first of all that a single solution has been devised both for the immediate requirements of real-time training and for the calculation of the “stationary” value of coherence between those two sites at the prevailing frequency. Secondly, it is now possible to work day-to-day without always referring back to the databases. This makes it practical to work with even a rapidly-responding measure such as coherence without groping in the dark and holding one’s breath with regard to possible over-training. In fact, now that Joe Horvat has the BrainMaster underfoot he has been liberated from the policy of training coherence first. He also uses the mini-Q to track changes between full brain maps.

Jonathan Walker recalled his own path into this field. He decided to go into neurology because there were so many unsolved problems. He was convinced drugs were going to be the answer, but over time found that drugs almost never heal things, even though they may enhance function. They do not get at the heart of problems. At one point a QEEG machine was purchased and no one knew how to use it, so he ended up getting involved. It was used only for discriminant analysis, as for example in distinguishing between depression and dementia, or between Bipolar Disorder and depression. Ominously, the people who did the work ended up not being liked by the people who didn’t do the work… At some point he came to hear about Joel Lubar’s work from a neuropsychologist interested in dyslexia. Jon found that although it was easy enough to identify elevations in theta/beta ratio in many ADHD children, this measure did not define who was actually responsive to “theta/beta training.” The latter group was much larger. So for these purposes, Walker actually abandoned the QEEG, and still obtained a clinical success rate on the order of 80%. When he later re-introduced the QEEG, his success went to some 95%. This is how he ended up in the “QEEG camp.”

There was a second motivation, namely his abiding interest in dyslexia. “There was no dyslexia section in the [protocol] handbook…” A patient could not come with only dyslexia and be systematically trained successfully. It was at this point that Jon Walker encountered the early work of Joe Horvat on coherence training. Joe had had success with learning disabilities induced through traumatic brain injury. The new coherence training was helpful for LD and epilepsy, but still didn’t do much for ADHD, where the traditional approaches largely sufficed. What emerged was a combination of power-based and coherence-based training to address both the more general sub-cortical disregulations and the more cortical features. Jon thought increasingly in terms of cortical “modules” in which certain functions were expressed through particular linkages, and in time all such linkages would be fully characterized. In the meantime, some worthwhile conjectures could serve as preliminary guides to training for specific functions.

Striking results with certain patients motivated a continuing exploration along these lines. For example, one patient with only coherence anomalies significantly increased his batting average and became a sharpshooter after coherence-based training. The coherence training was also faster. Jon found it quite common to train to a power anomaly and not see changes over five to ten sessions. It is very unusual to see that happen with coherence.

This reminds me of a point that Jay Gunkelman had made about the mu rhythm. It turns out not to be so effective to target it directly, but other kinds of training can cause the mu rhythm to desynchronize. Also, we have been told of many occasions in which our kind of inter-hemispheric training brings about a substantial reduction in both high and low frequency amplitude elevations once the “right” reward frequency has been found. An equivalent amount of time spent with “inhibit-based training” would not have had that effect. In actual practice, of course, the inhibits are engaged all the time. Clearly it is the reward that is making the difference.

Amplitude elevations are likely to be signatures of disregulation, but they may not indicate the most propitious target for training. Jon addresses the most extreme coherence abnormalities seen in beta, alpha, theta, and delta, and then repeats the Q. For learning disabilities, it seems to be most important to get the beta band right. Jon finds deficits in coherence, particularly in beta, to be more resistant to training, taking perhaps twice as long, at about ten sessions. Jon focuses first on the intra-hemispheric deviations and then on the inter-hemispheric ones, whereas Joe Horvat concerns himself mainly with the intra-hemispheric anomalies.

Jon has retained his interest in protocol-based training, and was recently tempted to try the inter-hemispheric bipolar training with a suicidal bipolar patient. The training pretty much got rid of the depression, but there was still some anger and irritability, and a post-training Q showed the emergence of some hypo-coherent anomalies. This is of great interest, since we don’t typically have such measurements available on our inter-hemispheric training. Jon suspected the fellow may have been over-trained, but we tend not to see over-training effects with this protocol.

With regard to dyslexia, Jon tried activating T3 in response to a finding by Shaywitz from imaging work. Dyslexics may not activate T3 appropriately when reading, but training to activate it does not solve the problem. This is an object lesson in the network relations of something as complicated as reading. Obviously all of the modules involved have to be part of the dance. It’s not just about activation, but rather about functional integration, which is ultimately a matter of timing. Jim Evans showed back in 1995 that dyslexics can have a decrease in coherence in any of the four major areas (P3, T3, T5, O1) and still exhibit dyslexia.

Kirtley Thornton has gone beyond Jon’s minimal activation approach to a three-hour neuropsychological evaluation to identify how particular LDs differ in their activation patterns from what is standardly seen in normals under the same challenges. The deviant linkages are then trained with Lexicor-based coherence training. Unfortunately, Kirtley was not available to present his work.

There were two presentations on protocol-based approaches to synchrony training: Les Fehmi’s five-channel synchrony training and our own inter-hemispheric bipolar desynchronization training. Les started out early on with a rather single-minded focus on alpha synchrony training. This thrust bore fruit among other things in Adam Crane’s development of the Capscan, the first computerized EEG trainer on the market. With our appearance on “The Home Show” in January of 1993, Les became aware of our work and adopted the beta/SMR training as well. Over time, however, he has gravitated back toward the alpha synchrony training as a first resort for most clients. The beta/SMR training may be inserted at a later time.

In order to do this training with maximum efficiency, Les has developed an analog EEG front-end that combines the signals from five channels and allows EEG reinforcement over a 40-Hz range rather than just at alpha. Bandwidth is selectable. The unit can serve as a front end for a variety of other systems. The client is reinforced for every cycle in which the threshold is exceeded, via both a strobe and an auditory signal. Those who are left-hemisphere dominant learn to produce alpha more easily with auditory feedback than with visual, and vice versa. So it is just best to provide both kinds of signals. This serves not only as a reinforcement but also as a direct stimulus. The phase of the stimulation can be adjusted for maximum impact, much as a swing is optimally reinforced at a very specific phase. I personally found myself falling into a pattern of “gripping the signal,” which is hardly conducive to the more “open focus” states Les is seeking in alpha. It seems to me that tactile feedback might provide a way out. The somatosensory response can track most of the EEG frequencies of interest, so the response can still be phase-sensitive; and yet it is much more difficult to “lock onto” a tactile than a visual signal.
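Why summing channels rewards synchrony can be shown with a small digital simulation, keeping in mind that Les's front-end does this in analog hardware and its internals are not described here. In the sketch, five unit-amplitude 10 Hz channels are summed with equal weights and one reward is counted per cycle in which the sum crosses a threshold. The equal weighting, the threshold of 3, the sampling rate, and all function names are hypothetical.

```python
import math


def summed_signal(channels):
    """Equal-weight, sample-by-sample sum of several channels."""
    return [sum(samples) for samples in zip(*channels)]


def count_reward_cycles(signal, threshold):
    """Count cycles whose excursion exceeds threshold (one count per upward crossing)."""
    count = 0
    above = False
    for value in signal:
        now_above = value > threshold
        if now_above and not above:
            count += 1
        above = now_above
    return count


def sine(freq_hz, phase_deg, fs=256, seconds=1.0):
    """One channel of an idealized sinusoid, sampled at fs."""
    return [math.sin(2 * math.pi * freq_hz * n / fs + math.radians(phase_deg))
            for n in range(int(fs * seconds))]


# Five unit-amplitude 10 Hz channels: all in phase vs. phases spread evenly.
in_phase = [sine(10, 0) for _ in range(5)]
spread = [sine(10, k * 72) for k in range(5)]

print(count_reward_cycles(summed_signal(in_phase), threshold=3.0))
print(count_reward_cycles(summed_signal(spread), threshold=3.0))
```

With all five channels in phase, every one of the ten cycles in the one-second window earns a reward; with the same activity spread evenly in phase, the sum cancels and nothing is rewarded, even though each channel's own amplitude is unchanged.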

Les readily acknowledges that it may be difficult to set up a situation where someone systematically increases his alpha, or any other frequency for that matter. But that is not an indictment of the approach. The training gives one the direct experience of a different way of attending to the world, and that capability carries forward even as explicit EEG training effects may slide beneath recognition. The attentional process is regarded on two axes: One ranges from narrow or directed attention to diffuse or broad attention. The other axis ranges from an objective or remote kind of attention to an immersed or absorbed kind of attention. Our preoccupations in life shape us toward particular attentional styles, at some cost to autonomic regulation, arousal level, and stress tolerance. Les used to emphasize the kinship between attentional style and arousal level more than he did on this occasion, but the connection still holds.

Alpha is not always an index of good functioning. It can also indicate a lack of flexibility (“stuck alpha”), and it can be part of the body’s analgesic response. Or it can be part of a dissociative PTSD response. A combination of up- and down-training is often used to induce a greater flexibility of responding. The two extreme styles (narrow and objective versus diffuse and absorbed) may involve different aspects of the complex alpha rhythm, with the latter dominating at a lower frequency. The phase may also be crucially involved, in that it can delineate what we may experience as self versus the other at any moment. This simple identification can explain the profound, boundary-breaking, unifying experiences that may occur in meditation or in alpha training. An expansive domain of high coherence seems to be more the issue here than the specific frequency involved. Indeed, Fehmi’s new instrumentation will allow training at any common EEG frequency with a mere dial selection, with the five-channel synchrony mode assuring that only the synchronous component of the signal will be reinforced throughout. With a unitary phase defining the domain of the experience of “self,” it follows that the awareness of the boundary of self and the other involves the interference pattern in the region where different phases abut each other.

At so many levels we find the brain’s regulatory function, at the highest levels of synaptic transport, to be involved with the management of neuronal assemblies: their spatial extent, their width in frequency space, and their temporal properties of waxing and waning. The phase may be the defining variable with respect both to the spatial boundaries of the ensemble locally and to its relationships with other assemblies distally.

Working overtly with attention may be the equivalent in neurofeedback of working with the breath in biofeedback. It is both a voluntary and an involuntary activity, and we are in a position to increase our voluntary contribution purposefully for a time, or even for all time. This also provides perhaps our most direct experience of the brain’s attempt to regulate itself. By bringing our attention to the process of attention, we enhance, if only momentarily, the hierarchy of regulation. And then we find that learning will in fact have occurred.

In my own presentation, I talked about the “small-world” model of networks in which neuro-regulation must be analyzed in terms of a hierarchy of control. The control of timing of neuronal assemblies must then be seen as a distributed network function to which a variety of structures contribute. The hierarchical nature of the organization means that we must look to the earliest (in the evolutionary sense) and the most centralized structures for their key contribution to the organization of brain timing, even though that contribution may be veiled behind the more obvious activity of our glorious neocortex.

The bipolar training at homologous sites can be interpreted as a challenge to the brain to desynchronize. This can be seen in Figure 1, where we are operating at a zero degree phase angle (on that plot) such that synchronous signals are entirely suppressed. As signals deviate from a condition of synchrony they become “visible” to the measurement, and at sufficiently large deviations will be reinforced. Thus the bipolar training will reward “anything besides synchronous activity.” Hence it has the effect of challenging the EEG to desynchronize.
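For idealized equal-amplitude signals, the bipolar (difference) amplitude is 2A·|sin(φ/2)|, so a reward threshold translates directly into a minimum relative phase that must be reached before anything is rewarded. The sketch below uses A = 100 as in the Figure 1 caption; the threshold of 100 and the function names are hypothetical.

```python
import math


def bipolar_amplitude(phase_deg, a=100.0):
    """Amplitude of the difference of two equal sinusoids offset by phase_deg."""
    return 2.0 * a * abs(math.sin(math.radians(phase_deg) / 2.0))


def min_rewarded_phase_deg(threshold, a=100.0):
    """Smallest relative phase (0 to 180 degrees) whose bipolar amplitude reaches threshold.

    Returns None when the threshold exceeds 2a, the largest possible difference.
    """
    ratio = threshold / (2.0 * a)
    if ratio > 1.0:
        return None
    return math.degrees(2.0 * math.asin(ratio))


print(bipolar_amplitude(0))          # fully synchronous activity is invisible
print(min_rewarded_phase_deg(100))   # about 60 degrees with these amplitudes
```

Synchronous activity produces no signal at all, and with this threshold the two sites must drift roughly 60 degrees apart before a reward is earned, which is the sense in which the montage rewards "anything besides synchronous activity."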

Frequency-based reward training can then be seen as a subtle disturbance out of the prevailing state of the brain. The brain yields to this intervention in the first instance, but it cannot allow its own state to be arbitrarily changed, so it mounts a response. The neurofeedback challenge is therefore intrinsically bilateral, involving both a provocation in one direction and a response in the opposite direction. That being the case, the direction in which the initial challenge is applied may be an issue of only second-order importance. If the initial displacement is in the direction toward normalcy, we would invoke a “normalization model.” If the initial displacement is either random or performed in the absence of any known deviations, we would invoke an “exercise model.” And if the displacement is in the opposite direction of our intended objective, we would invoke a “homeopathy model.” Collectively these constitute the full palette of the “Generalized Self-Regulation Model.”
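The three cases differ only in how the direction of the initial displacement relates to a known deviation, so they can be stated compactly as a decision rule. The numeric encoding (+1, −1, 0, None) and the function name are our own illustration, not part of the model as presented.

```python
def challenge_model(displacement, deviation):
    """Label a training challenge in the terms of the Generalized Self-Regulation Model.

    displacement: +1 or -1 for the direction in which training initially pushes a
        measure, or 0 if that direction is effectively random.
    deviation: +1 or -1 if the measure is known to be elevated or depressed,
        or None if no deviation is known.
    (This numeric encoding is a hypothetical illustration.)
    """
    if displacement == 0 or deviation is None:
        return "exercise"
    if displacement == -deviation:
        return "normalization"  # initial push is back toward the norm
    return "homeopathy"         # initial push is away from the intended objective


print(challenge_model(-1, +1))    # pushing down an elevated measure
print(challenge_model(+1, None))  # no known deviation
```

Because the brain's response opposes the initial push in every case, all three labels describe the same underlying challenge-and-response process.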

In this manner it can be explained that the apparently divergent and incompatible objectives of Les Fehmi’s approach and our own can both yield the modest objective of improved self-regulation by the brain. This is a rather broad terrain. First of all, our rather considerable overlap with psychopharmacology in terms of efficacy surely is traceable to the central role that is played by the brainstem in neuro-regulation, irrespective of whether our viewpoint is neuro-chemical or bio-electrical. The same probably accounts for our robust efficacy in migraine, and for our effectiveness, such as it is, for narcolepsy. At yet another level, protocol-based training can be very helpful for basal ganglia issues such as Tourette Syndrome and Parkinson’s. And protocol-based training covers all of the bases in emotional disregulation such as we see in PTSD, sociopathy, Reactive Attachment Disorder, the autism spectrum, and addiction. This covers most of what is likely to walk into the office of a mental health practitioner during the course of a career.

What differentiates the various (generalized) techniques is at a more subtle level of attentional style and of dimensions of awareness. At this point we are beyond merely keeping people migraine-free and into questions of the overall quality of mental functioning. By the same token, the specific coherence-based training is not usually required to achieve basic brain stability or the regulation of arousal, attention, and affect. But it can address specific learning deficits and the localized deficits that may follow from stroke and traumatic brain injury or the dementias.

A comprehensive practice would therefore try to provide for both types of capabilities. It is realistic to suppose, however, that practitioners will retain their primary therapeutic style, and that now includes their way of doing neurofeedback. The vast majority of currently active practitioners have come to the field through protocol-based or mechanisms-based training. What is the natural growth path for such practitioners? We ourselves are at this moment in mid-stride toward the adoption of dual-channel training. That transition opens up two evolutionary pathways. One is to the adoption of a more comprehensive inhibit strategy, and the other is explicit attention to the linkages between sites through tracking of coherence throughout the training process.

This new focus on the trends in coherence throughout the training brings together EEG assessment and neurofeedback training into a near real-time relationship in the clinic, which breaks one of the huge bottlenecks associated with QEEG-based training, namely the delays in getting the requisite information back into the clinic. (“Just what are you going to do while you are waiting for your Q?”) We have here an entry path into more quantitatively-based training that places only minimal additional demands on the clinician.

In this manner, clinicians can continue to operate in their usual fashion, optimizing their main approach through small adjustments that all lie within the paradigm. At the same time, an effort can be made to adopt at least one other technique that lies largely outside of their existing paradigm, simply in order to have a continuing independent vantage point from which to regard their own work. Practitioners who have come to the field through QEEG-based training are increasingly adopting more dynamically-based training as a complement, and the same should be happening in the other direction. Coherence-based training offers perhaps the most congenial port of entry.

In the above, we have made the case that all narrow-band reward-based training can be regarded as a modest challenge to the brain. But it is also in the nature of any such challenge to serve as a constraint upon the brain. Clinicians must always balance the positive clinical effects of a particular challenge with the costs that may be exacted by any constraints that may be imposed. This appraisal is made most honestly from the perspective of an entirely different technique where this tradeoff would be very different.

Over the longer term, there will be growth to more comprehensive capabilities. Two more talks at the conference spoke to that issue. Bill Hudspeth talked about his “database-free” analysis of the coordination between the different sites based on analysis of coherence between all of the site pairs. And David Kaiser covered the same ground with respect to comodulation, the correlation between sites in the amplitude domain (and typically calculated at the dominant frequency). On the basis that working with the comodulation measure has been so productive both conceptually and practically, David is not at all ready to concede that our current fascination with phase will endure. Indeed it is not easy to separate the variables.
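Comodulation as described here is a correlation between sites in the amplitude domain, so phase plays no part in it. David's exact computation was not presented; the sketch below simply takes the Pearson correlation of two amplitude envelopes, assuming the envelopes have already been extracted at the dominant frequency. The envelope values and function name are hypothetical.

```python
import math


def comodulation(env_a, env_b):
    """Pearson correlation between two sites' amplitude envelopes.

    Phase plays no part: only the co-variation of amplitude over time counts.
    """
    n = len(env_a)
    mean_a = sum(env_a) / n
    mean_b = sum(env_b) / n
    cov = sum((a - mean_a) * (b - mean_b) for a, b in zip(env_a, env_b))
    var_a = sum((a - mean_a) ** 2 for a in env_a)
    var_b = sum((b - mean_b) ** 2 for b in env_b)
    return cov / math.sqrt(var_a * var_b)


# Hypothetical envelope samples at the dominant frequency for three sites.
site_1 = [1.0, 2.0, 3.0, 2.0, 1.0]
site_2 = [2.0, 4.1, 5.9, 4.0, 2.0]
site_3 = [3.0, 2.0, 1.0, 2.0, 3.0]

print(round(comodulation(site_1, site_2), 3))  # waxing and waning together
print(round(comodulation(site_1, site_3), 3))  # one waxes as the other wanes
```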

Bipolar placement, particularly at near-neighbor sites that have a lot of shared activity, effectively serves as a transducer of phase information into net amplitude (see Figure 1 around the zero degree phase point). Once that occurs, the variables are not even separable any more. Hence bipolar placement can be seen as bringing phase into play even more as a training variable. It is quite possible that this accounts for the clinical impression that bipolar placement may be slightly stronger in its training effect than referential placement. And it is also possible that if phase was not important before, then bipolar placement has made it so.

There is one application where I believe an emphasis on phase would “over-constrain” the system. It is in the matter of two-person training. Les Fehmi uses his new instrument with couples, for example, and asks them effectively to synchronize their alpha activity to produce rewards. This situation is tailor-made for comodulation-based training, it seems to me. Surely it is enough that couples experience simultaneous occurrences of their alpha spindles. It may be too much to ask that both also operate at the same alpha frequency.
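A comodulation-style criterion for couples could reward only the co-occurrence of alpha spindles, leaving each partner free to run at his or her own alpha frequency and phase. The rule below is a hypothetical sketch, not the logic of Les's instrument: each partner's envelope is compared against an individual threshold, and a reward is counted at each onset of a joint spindle. The envelope values and thresholds are invented.

```python
def joint_spindle_onsets(env_a, env_b, thr_a, thr_b):
    """Count onsets of moments when both partners' alpha envelopes exceed their thresholds.

    Each partner has an individual threshold, and neither the alpha frequency
    nor the phase of either partner enters the decision.
    """
    onsets = 0
    previously_joint = False
    for a, b in zip(env_a, env_b):
        joint = a > thr_a and b > thr_b
        if joint and not previously_joint:
            onsets += 1
        previously_joint = joint
    return onsets


# Hypothetical envelope samples for two partners (arbitrary units).
partner_1 = [1, 5, 6, 2, 1, 6, 7, 1]
partner_2 = [2, 4, 7, 6, 1, 1, 8, 2]
print(joint_spindle_onsets(partner_1, partner_2, thr_a=3, thr_b=3))
```

Here two joint spindles are rewarded; the spindle partner 1 produces alone (fifth and sixth samples) earns nothing, however strong it is.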

The barriers to a fuller utilization of Hudspeth’s and Kaiser’s analysis of the EEG are practical ones. One looks forward to the day when a 19-electrode cap can be placed without delay and without goop. An algorithm could readily be devised by means of which coherence-based training can be delivered largely under software control. In the meantime, a lot can already be achieved with only two channels, or four, or six.
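The bookkeeping behind such an algorithm grows quickly with the number of channels: n sites give n(n − 1)/2 linkages, so a full 19-site montage yields 171 pairs, while two, four, or six channels give 1, 6, or 15. The sketch below enumerates pairs over the standard 19 sites of the 10-20 system (older T3/T4/T5/T6 naming, as used above) and picks the most deviant ones for training. The Z-scores and the selection rule (`most_deviant_pairs`, with its `k` and `min_abs_z` parameters) are hypothetical, not Hudspeth's method or any product's.

```python
from itertools import combinations

SITES_10_20 = ["Fp1", "Fp2", "F7", "F3", "Fz", "F4", "F8",
               "T3", "C3", "Cz", "C4", "T4",
               "T5", "P3", "Pz", "P4", "T6", "O1", "O2"]


def site_pairs(sites):
    """All unordered pairs of sites: n * (n - 1) / 2 of them."""
    return list(combinations(sites, 2))


def most_deviant_pairs(z_by_pair, k=3, min_abs_z=2.0):
    """Pick up to k pairs whose |Z| is at least min_abs_z, largest first (hypothetical rule)."""
    candidates = [(pair, z) for pair, z in z_by_pair.items() if abs(z) >= min_abs_z]
    candidates.sort(key=lambda item: -abs(item[1]))
    return candidates[:k]


print(len(site_pairs(SITES_10_20)))
print([len(site_pairs(SITES_10_20[:n])) for n in (2, 4, 6)])

# Hypothetical coherence Z-scores for a handful of pairs.
z_scores = {("F3", "F4"): 2.5, ("C3", "C4"): -3.1, ("O1", "O2"): 1.2, ("T3", "T5"): -2.0}
print(most_deviant_pairs(z_scores))
```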

In addition to the formal program of the conference, Mike Gismondi asked Marty Wuttke to talk about the program he has developed in the Netherlands. Marty talked about his own journey, involving first of all his own addiction to drugs, his subsequent involvement with addictions treatment as a provider, and then the addition of neurofeedback (Capscan). He also talked about the birth of his CP child, for whom he sought the help of Margaret Ayers (“as the only one who would train children before the age of 7”). By now his son is 12 years old, and is cognitively in good shape, although there are still motor control issues.

Marty’s intensive two-week program in the Netherlands is now aiming at the exploration of the dimensions of consciousness and toward spiritual transformation, a pathway reminiscent of his own journey out of addiction. He sees the addiction model as more broadly applicable to people who are stuck in certain patterns of responding, although the focus of his work is no longer addiction per se. The spiritual awakening is first of all a program to expose and diminish the interferences to authentic being. The program is heavily biased toward the experiential. Neurofeedback is done twice a day, with an emphasis on alpha synchrony training and on training of pre-frontal function.

Figure 1. The plot illustrates the net amplitude that would be seen for two signals at the given phase angle in a bipolar configuration. Each signal is of magnitude 100. Maximum net signal is reached at 180 degrees in phase difference, and zero signal is seen at zero phase difference. Near zero degrees the net signal has a strong dependence on relative phase, thus promoting desynchronization in a feedback configuration. Near 180 degrees the net signal has a very soft dependence on relative phase. The latter property allows instantaneous synchrony measures to be a reasonable surrogate for instantaneous coherence measures for training purposes.
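The curve in Figure 1 follows from elementary trigonometry, assuming two pure sinusoids of equal amplitude A at the same frequency (A = 100 in the figure):

```latex
x_1(t) = A\sin(\omega t), \qquad x_2(t) = A\sin(\omega t + \varphi)

x_1(t) - x_2(t) = -2A\sin\!\left(\tfrac{\varphi}{2}\right)\cos\!\left(\omega t + \tfrac{\varphi}{2}\right)
\quad\Longrightarrow\quad N(\varphi) = 2A\left|\sin\tfrac{\varphi}{2}\right|

\frac{dN}{d\varphi} = A\cos\tfrac{\varphi}{2} \qquad (0 \le \varphi \le \pi)
```

With A = 100, the net amplitude N rises from 0 at zero phase to 200 at 180 degrees. Its slope is 100 per radian (about 1.75 per degree) at zero phase and falls to zero at 180 degrees, which is the strong versus soft phase dependence the caption describes. The summed signal is the mirror image, with amplitude 2A|cos(φ/2)|.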