Endogenous Neuromodulation in the Larger Perspective of Neurofeedback

by Siegfried Othmer | July 28th, 2023

The Deep Roots of Endogenous Neuromodulation

The method of Endogenous Neuromodulation has come to dominate our work in neurofeedback. It is characterized by the absence of any discrete reinforcers, which are the distinguishing feature of the operant conditioning model. We still rely on a complementary inhibit scheme, but the discrete markers here are mere cues to the brain as to its state of dysregulation. They do not offer a prescription for change. The response is left to the discretion of the brain. In endogenous neuromodulation, this principle is extended more broadly: the response to the brain-derived signal is left entirely to the discretion of the brain itself.

As we regard our 38-year development trajectory in this field retrospectively, it is apparent that the element of endogenous neuromodulation was always present, even as we were still formally engaged with the operant conditioning model. First and foremost, there was the incorporation of EEG dynamics at the training frequency prominently in the feedback signal. In connection with his work on sensory substitution, Paul Bach-y-Rita was prompted to say: “If you give the brain any information about itself, it will make sense out of it.” If the brain is given information on its own dynamics, it will take advantage, and we refer to this aspect appropriately as endogenous neuromodulation. Here’s the early history:

Centrality of EEG Dynamics in Feedback

In March of 1985 Sue Othmer and I had our first exposure to neurofeedback at the Beverly Hills office of Margaret Ayers, who was in clinical practice with Sterman’s laboratory instrument and with methods first learned in Sterman’s laboratory and then adapted. Sue experienced a definite first-session effect, the so-called ‘clear-windshield’ effect. I responded positively in various respects within four sessions. However, in my own training the reward incidence was very low even at the most sensitive setting of the threshold. This was because I have a very low-amplitude EEG. Furthermore, I gained the sense of having some control over the rate of rewards, which on later reflection was likely due to my subconscious generation of neck tension to advance the cause of generating rewards. Since my manifest gains could not be credited to the corrupted reward stream, they had to be traceable to the fact that my brain was mainly entertained by the fluctuating green light, which tracked the dynamics of the reward band: Endogenous Neuromodulation!

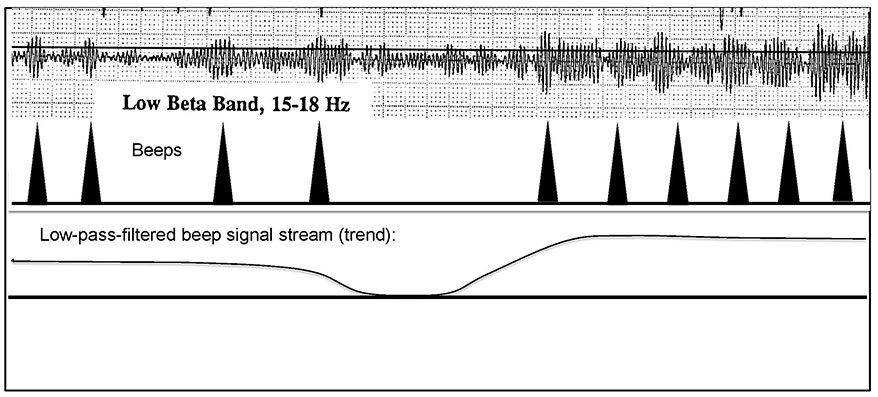

Display of the EEG dynamics at the training frequency has therefore always been a prominent feature of our feedback designs, across three generations of instruments: NeuroCybernetics, EEGer, and Cygnet (and this then carried over into other instrument designs such as Brainmaster). The scrolling clinician display for the NeuroCybernetics is illustrated in Figure 1, as recorded on a thermal printer. The origins of the display of the dynamics, however, date back to the original research instrument developed by M. Barry Sterman. In consequence, it may well have been the case that the discrete feedback events were rarely the main event, as seen in the brain’s perspective, even in Barry’s early work with human subjects. So how did all this come about?

Figure 1. Clinician display of the raw EEG and three bands being tracked through the training: the theta band, 4-7 Hz; the training band, either 12-15 Hz or 15-18 Hz; and the upper beta band, 22-30 Hz.

The centrality of operant conditioning in therapeutic neurofeedback is traceable to its origins in animal work. In the original work with cats, the target bursting behavior in the SMR band—which indexed motoric stillness—was readily distinguishable from EEG background activity. The training was event-based operant conditioning in its essence. The discreteness that was seen in cat brains was no longer available for the SMR-training of human brains, however, so in emulation of the procedure with cats, thresholds were kept high in order to reward only rare, high-amplitude excursions in the SMR band. These were extrema in a continuous distribution.

This meant that rewarded events would necessarily be sparse. Both Sterman and Lubar insisted that the threshold be chosen so that rewards were relatively rare (e.g., fewer than 16 per minute, per Lubar). Under such conditions, there is a premium on the avoidance of ‘false’ rewards. For that purpose, Sterman installed inhibits on transient excursions into dysregulation to make sure that the reward signal was neither itself compromised by extraneous “within-band” activity nor temporally coincident with a transient dysregulation event showing up elsewhere in the spectrum. For example, scalp muscle activity might very well play into the SMR band and inappropriately trigger a reward. And if a theta burst occurred concurrently with an SMR burst, both would effectively be ‘rewarded.’ In consequence of the resulting sparseness of valid rewards, the training process was relatively tedious, particularly for a young child being made to train for his ADHD.
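The gating of rewards by inhibits described above can be sketched in a few lines. This is a minimal illustration, not a reconstruction of Sterman's instrument: the `BandAmplitudes` container, the `reward_allowed` function, and all threshold values are hypothetical, and the band edges are simply those shown in the clinician display.

```python
from dataclasses import dataclass


@dataclass
class BandAmplitudes:
    """Hypothetical per-sample band amplitudes (arbitrary units)."""
    theta: float      # 4-7 Hz inhibit band
    reward: float     # training band, e.g. 12-15 Hz
    high_beta: float  # 22-30 Hz inhibit band


def reward_allowed(amp, reward_threshold, theta_limit, high_beta_limit):
    """Allow a reward only when the training band exceeds its threshold
    and neither inhibit band is above its own limit."""
    reward_met = amp.reward > reward_threshold
    inhibited = amp.theta > theta_limit or amp.high_beta > high_beta_limit
    return reward_met and not inhibited


# Illustrative values only: a clean SMR excursion, then the same
# excursion coinciding with a theta burst.
clean = BandAmplitudes(theta=5.0, reward=9.0, high_beta=4.0)
with_theta_burst = BandAmplitudes(theta=12.0, reward=9.0, high_beta=4.0)

print(reward_allowed(clean, 8.0, 10.0, 6.0))
print(reward_allowed(with_theta_burst, 8.0, 10.0, 6.0))
```

The second case shows the point of the design: the reward criterion is met, but the concurrent theta burst withholds the reward so that the burst is not itself reinforced.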

Emergence of High Reward Incidence

Over the course of months to years of clinical experience, Margaret Ayers had been shaped in her behavior to be ever more generous in reward incidence for the sake of the long-suffering clients, and she was rewarded with improved results. Eventually, reward incidence of even 75-85% was not unheard of. Consider the implications: If the typical ‘behavior’ of the EEG-band was being routinely rewarded, what is the impetus for change? And yet children responded better than when confronted with greater challenges (i.e., lower reward incidence). With the transition from analog to computerized instrumentation that we undertook in our organization in 1985 with the NeuroCybernetics, we went back to first principles and revisited this issue.

Within the frame of information theory, a fifty percent reward incidence yields the greatest number of transitions and thus maximizes our data rate. Upon some further investigation we adopted the Ayers approach of generous reward incidence. It clearly gave better results and made for less frustrated clients. Threshold settings were made adaptive (with long time constants) to maintain a relatively invariant level of difficulty, and the same was done for the inhibits. Discrete auditory rewards (‘beeps’) were rate-limited at a maximum of 2/sec, following the Sterman design.
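The information-theoretic point about fifty percent reward incidence can be checked with a short calculation. The sketch below assumes, purely for illustration, that successive reward decisions are independent with a fixed reward probability `p`; real EEG is correlated in time, so the numbers indicate the shape of the trade-off rather than an actual data rate. The function names are hypothetical.

```python
import math


def binary_entropy(p):
    """Shannon entropy, in bits, of one reward/no-reward decision
    with reward probability p."""
    if p in (0.0, 1.0):
        return 0.0
    return -(p * math.log2(p) + (1 - p) * math.log2(1 - p))


def transition_probability(p):
    """Probability that two successive independent decisions differ
    (reward followed by no reward, or the reverse)."""
    return 2 * p * (1 - p)


for p in (0.1, 0.5, 0.8):
    print(f"p={p:.1f}  entropy={binary_entropy(p):.3f} bits  "
          f"transitions={transition_probability(p):.2f}")
```

Both quantities peak at `p = 0.5`. At a reward incidence of 80 percent the entropy is still roughly 0.72 bits per decision under this assumption, so the generous reward incidence gives up only part of the theoretical maximum.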

Dynamics in the Infra-Low Frequency Regime

Under conditions of high reward incidence, the burden on the client was to maintain a continuous run of beeps, maintaining the pleasant cadence of 2/sec. This had the effect of reversing the classic paradigm: the attended event was no longer the individual reward but rather the dropout of the beeps. Attention had now shifted from text—the discrete event—to context, the broader sweep of local cortical activation, as reflected in the ebb and flow of the incidence of beeps. This is illustrated in Figure 2. In retrospective view, infra-slow fluctuations in cortical activation had been rendered visible to the brain via an operant conditioning design—albeit one that violated all the rules. Irony of ironies, the rewards of an operant conditioning design had been recruited by the brain in the service of endogenous neuromodulation in the infra-low frequency regime.

Figure 2. The training band is replicated here from Figure 1, with a threshold indicated. A corresponding beep rate is indicated, along with a low-pass-filtered analog of the time course of beep incidence, which falls into the infra-low frequency regime.

So here we have a second element of the process of endogenous neuromodulation. Its clinical relevance was rendered unambiguous when we were working with blind clients and highly impaired young children who weren’t watching the screen. The response to the training could be just as rapid as when the EEG dynamics were in the feedback loop as well. We lacked an explanatory model for such a rapid response until we entered the infra-low frequency (ILF) regime in 2006. And therein lies the answer to the question posed above: Where is the impetus for change? The brains of many trainees had over the course of time shaped clinician behavior to act in their interest: providing actionable information on dynamics in the upper ILF regime, as reflected in the time course of the amplitude of the target frequency.
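The relationship between the threshold, the rate-limited beeps, and the slow time course of beep incidence can be sketched as follows. This is a toy model under stated assumptions: the envelope is synthetic, the threshold is fixed rather than adaptive, the sample rate and the 5-second smoothing constant are arbitrary, and the function names are hypothetical. Only the 2/sec maximum beep rate comes from the text.

```python
def beep_samples(amplitudes, threshold, sample_rate_hz, max_beeps_per_sec=2):
    """Return a 0/1 list marking samples at which a beep is issued:
    amplitude above threshold, and the rate limit has elapsed."""
    min_gap = round(sample_rate_hz / max_beeps_per_sec)  # samples between beeps
    beeps = [0] * len(amplitudes)
    last = -min_gap  # allows a beep on the very first sample
    for i, a in enumerate(amplitudes):
        if a > threshold and i - last >= min_gap:
            beeps[i] = 1
            last = i
    return beeps


def low_pass(signal, sample_rate_hz, time_constant_s):
    """First-order exponential smoothing (single-pole low-pass filter)."""
    alpha = 1.0 / (1.0 + time_constant_s * sample_rate_hz)
    out, y = [], 0.0
    for x in signal:
        y += alpha * (x - y)
        out.append(y)
    return out


fs = 10  # samples per second (illustrative)
# Synthetic envelope: 20 s above threshold, then 20 s below it.
envelope = [6.0] * (20 * fs) + [2.0] * (20 * fs)

beeps = beep_samples(envelope, threshold=4.0, sample_rate_hz=fs)
# Scale by fs so the smoothed trace reads in beeps per second.
incidence = low_pass([b * fs for b in beeps], fs, time_constant_s=5.0)

print(sum(beeps))  # 40 beeps in the first 20 s, i.e. 2/sec
print(round(incidence[20 * fs - 1], 2), round(incidence[-1], 2))
```

The smoothed trace rises toward 2 beeps/sec while the envelope stays above threshold and decays toward zero once the beeps drop out. It is this slow rise and fall, rather than any individual beep, that carries the infra-low frequency information discussed above.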

The Optimal Response Frequency Paradigm

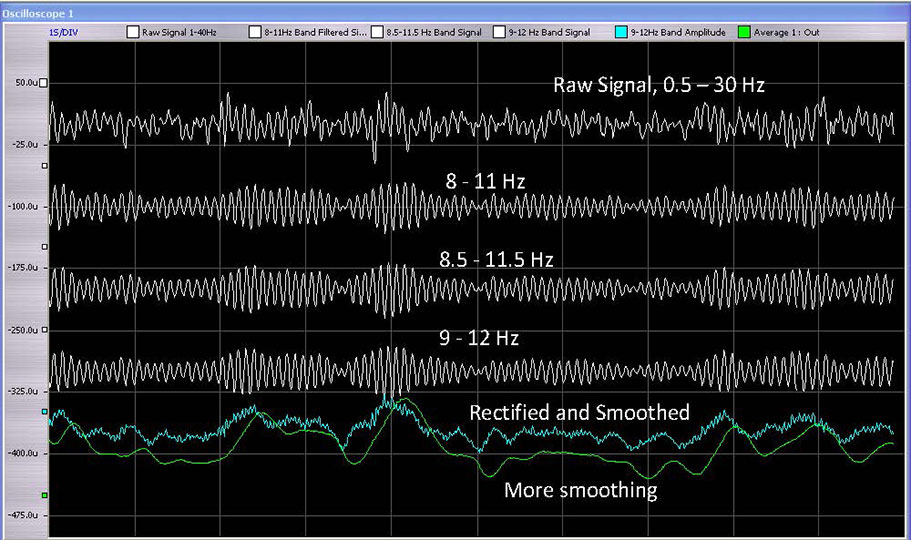

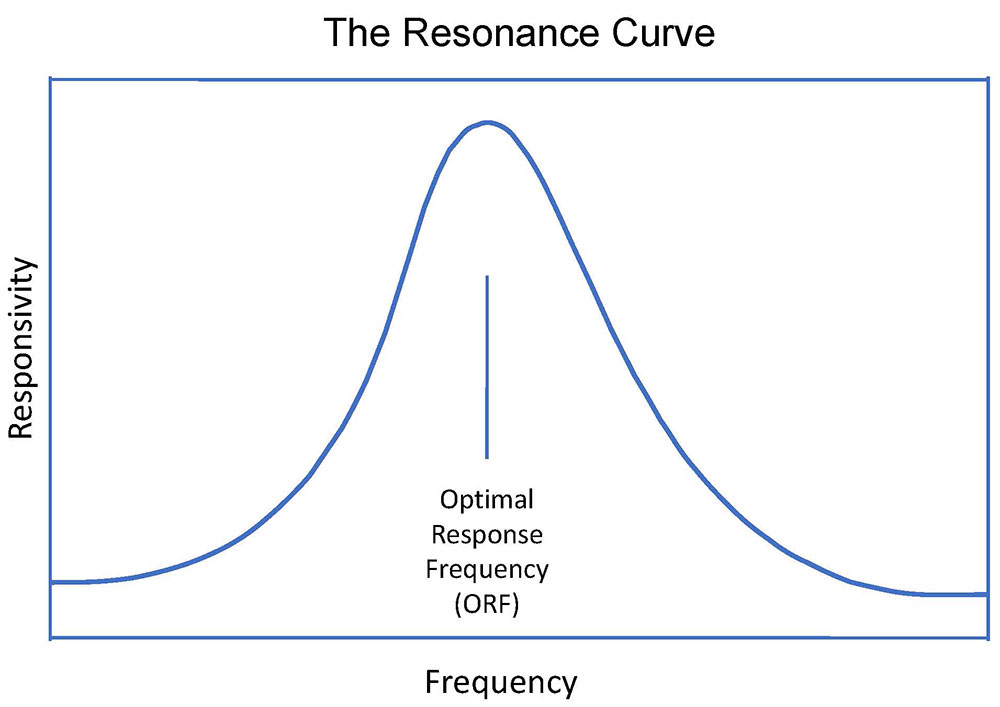

The discovery of the principle of Optimal Response Frequency (ORF) within the EEG range further consolidated the proposition that the brain is revealed to itself through its dynamics. It is only by way of the dynamics that two adjacent frequencies are readily distinguishable in the real-time environment of neurofeedback training. This is illustrated in Figure 3. The ability to discriminate closely spaced frequencies is substantially heightened in the vicinity of the ORF, at which the training proceeds at maximal rate, and the trainee feels better regulated than at adjacent frequencies. This is illustrated in Figure 4, which shows the response curve as a function of frequency. The width of the curve at half-height is on the order of 5-10% of the center frequency, which forces the conclusion that we are dealing with a resonance phenomenon. This, in turn, prompts the conjecture that critical frequencies are being identified that underlie the organization of the entire frequency domain. The resonance response characteristic will be taken up in another newsletter.

Figure 3. Filtered signals are shown for three adjacent and overlapping bands. It is difficult to tell the difference by observation, but the brain may have no difficulty discriminating between them under certain conditions. This demonstrates the level of subtlety at which the brain engages with its own signal.

Figure 4. The brain’s ability to discriminate nearby frequencies is accentuated at special frequencies that we refer to as optimal response frequencies. In view of the narrowness of the width of the curve, we are dealing here with a resonance phenomenon.
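The narrowness of the response curve can be put in the conventional terms used for resonances. If the half-height width quoted above (5-10% of the center frequency) is treated as the bandwidth, the quality factor is simply the center frequency divided by that width. The sketch below is illustrative only: the 10 Hz center frequency is arbitrary, and the Lorentzian curve shape is an assumption, not something established by the clinical observations.

```python
def quality_factor(center_hz, half_height_width_hz):
    """Q = center frequency divided by the width of the response curve."""
    return center_hz / half_height_width_hz


def lorentzian_response(f_hz, center_hz, half_height_width_hz):
    """Hypothetical response curve: 1.0 at the center frequency,
    0.5 at half a width to either side."""
    half_width = half_height_width_hz / 2.0
    return 1.0 / (1.0 + ((f_hz - center_hz) / half_width) ** 2)


center = 10.0  # Hz, illustrative only
for fraction in (0.05, 0.10):
    width = fraction * center
    print(f"width = {width:.1f} Hz  ->  Q = {quality_factor(center, width):.0f}")
```

A width of 5-10% of the center frequency thus corresponds to a quality factor of roughly 10 to 20, independent of the center frequency chosen.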

Three Complementary Feedback Paths

The EEG dynamics at the training frequency, most efficiently at the ORF, inform the brain of the context in which the discrete rewards occur. In addition to the information flow at the ORF, there is the excursion into the ILF regime that the brain must be picking up on with the ebb and flow of beeps. The signal bandwidth extends from 0.3 Hz down to 1 mHz. The upper limit is given by the 2/sec maximum beep rate, and the lower limit is given by the 3-minute interval of automatic adjustment of the threshold level. Finally, the discrete rewards have not ceased to be relevant. We therefore have three complementary feedback modes rather than one.
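The text does not say how the two bandwidth limits were derived. One reading that reproduces both figures is to treat each interval as a first-order time constant τ and take the corresponding corner frequency f = 1/(2πτ). That interpretation is an assumption made here for illustration, and the function name is hypothetical.

```python
import math


def corner_frequency_hz(time_constant_s):
    """Corner frequency of a first-order filter with the given time constant."""
    return 1.0 / (2.0 * math.pi * time_constant_s)


upper = corner_frequency_hz(0.5)     # 0.5 s between beeps at 2/sec
lower = corner_frequency_hz(3 * 60)  # 3-minute threshold adjustment interval

print(f"upper = {upper:.2f} Hz, lower = {lower * 1000:.2f} mHz")
# prints "upper = 0.32 Hz, lower = 0.88 mHz"
```

Under this assumption the two intervals bracket a band from about 0.3 Hz down to about 1 mHz, in line with the figures quoted above.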

Presentation of EEG dynamics in feedback in Sterman’s original research instrument was paradoxical, in that Barry Sterman was a Skinnerian purist with respect to operant conditioning experimental design. The paradoxical aspect is reinforced when it is noted that later feedback designs—the football game, for example—did not include feedback on the dynamics, but rather showed only the discrete rewards. Also, Sterman never drew attention to this aspect of the feedback signal in any of his lectures. Largely due to his influence, the emerging neurofeedback discipline remained in the grip of the classic operant conditioning paradigm, and therefore was never able to articulate an alternative perspective. We likewise did not discount the relevance of the discrete rewards in our teaching. It was only with our excursion into the ILF regime, in the absence of any discrete rewards, that the reality of endogenous neuromodulation as the operative model became inescapable. The operant conditioning aspect of the training had dropped away, so the model simply no longer applied.

Operant conditioning had provided the intellectual scaffolding for neurofeedback throughout the history of the field, but it had never been a competent explanatory model for the most effective clinical neurofeedback. Endogenous neuromodulation has likely been a prime mover all along, in combination with a robust inhibit design. Ironically, Sterman had sown the seeds of that development in his own instrumental design—and no doubt benefited accordingly in his clinical research on epilepsy in human subjects.

The Role of the Inhibits

Nearly all conventional neurofeedback also provides for the cueing of the brain with respect to transient excursions into dysregulation, such as brief bursts of excessive theta-band or gamma-band amplitudes. Many a neurofeedback therapist has suspected this ‘inhibit’-training aspect of the protocol to have been the more important in the standard frequency-based protocols for many of their clients. Even with our much more impactful training in the infra-low frequency domain, the inhibit aspect of the protocol makes a substantial, and perhaps indispensable (i.e., complementary) contribution. It should be noted that nothing is really being inhibited in this process. The term derives from the fact that the early instantiation was by way of inhibiting inappropriate rewards. The inhibits constitute a fourth feedback path.

Evidence for all the above is provided by the fact that the NeuroCybernetics yielded clinical results that were clearly superior to those facilitated by other instruments available in those early days. Within a few years of its appearance in the marketplace in 1989, Autogenics abandoned the neurofeedback field entirely. Lexicor likewise stopped selling neurofeedback instruments, and decided to concentrate on its mapper. Both Lubar and Sterman were skeptical of our clinical reports in those early years, a clear indication that they were not seeing similar results.

The Relative Poverty of Formal Published Research

The above may also help to illuminate one of the ongoing paradoxes in the field, the observation that academic studies have tended to fall short of what is being achieved clinically. In at least a number of those studies care was taken to implement a ‘clean’ operant conditioning design, and even the inhibit aspect of the protocol might be dispensed with in that cause. In consequence, there is very little linkage with what has evolved in the clinical arena, and with what has come to constitute good practice. Reliance on impoverished reinforcement schema, combined with the modest number of training sessions typical in research designs, and operating in sterile settings that minimize the confound of human factors, has led to a serious underestimation of the brain’s capacity for self-recovery under more ideal conditions in clinical settings.

Plainly, group studies with manualized protocols are not able to adjudicate issues of efficacy of highly individualized training schema. Whereas the method of endogenous neuromodulation is nomothetic in design, it is idiographic in execution. Studies would typically be organized according to the formal protocol (nomothetic) aspects of the approach, whereas clinical success is secured by way of the individualization of the training, the idiographic aspects.

With operant conditioning, we are in narrow focus on a particular objective while being relatively blind to the context in which the training process unfolds. Yet ideally our training should be exquisitely context-sensitive, and at best that means the brain should chart the course to the greatest degree possible. In the ILF regime, we have no alternative but to let the brain take the lead. But the principle holds more generally, and indeed it has been in play all along. A top-down process has been surreptitiously hijacked by the brain and gradually shaped into a bottom-up process that is more to its liking. In the current idiom, one might say that the brain effected a paradigm escape.

This entire developmental thrust went against the prevailing regimented culture in both academic research and clinical practice, so the evolution of the method had to take place in an unusually permissive environment, one sufficiently isolated to provide a buffer against withering criticism—the scientifically guided clinical practice. At the same time, we have always been open about what we were doing through our professional training courses. The work proceeded in the tradition of observational, naturalistic science, effectively yielding an ‘ethology of brain behavior,’ in the spirit of Konrad Lorenz and Nikolaas Tinbergen. Such a departure is most fruitfully executed by outsiders from a discipline, as their vision is less blinkered.

At the same time, the rules were always observed in that deviation from existing practice was typically by way of incremental steps, and these were resorted to only when the established methods were unavailing. There were only occasional leaps, in the spirit of the ‘punctuated equilibrium’ theory of evolution of Stephen Jay Gould and Niles Eldredge. One was the discovery of the principle of optimal response frequency (ORF) in the late nineties, and the second was the incursion into the infra-low frequency regime with a frequency-based technique in 2006. Throughout, the mandate was always good clinical practice rather than zeal for pushing the frontiers.

Siegfried Othmer